Computer Structure: Pipeline (Presentation)

Contents

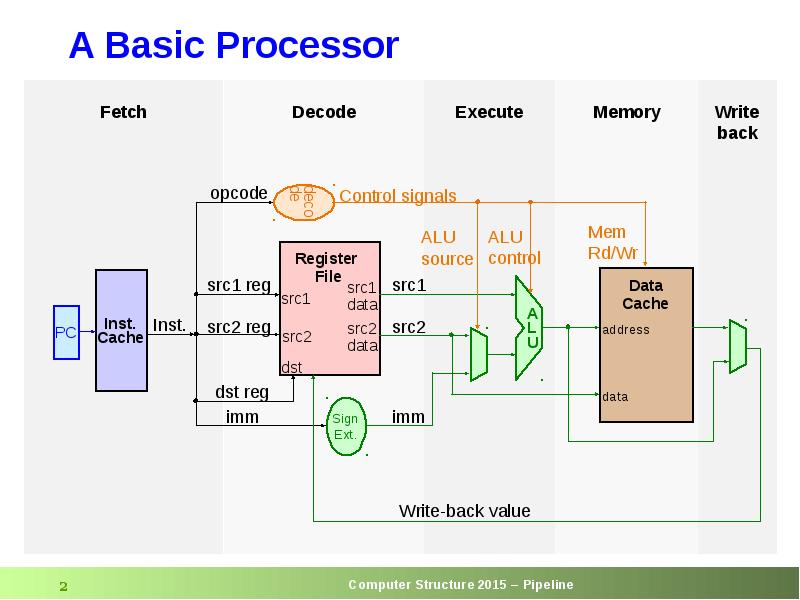

- 2. A Basic Processor

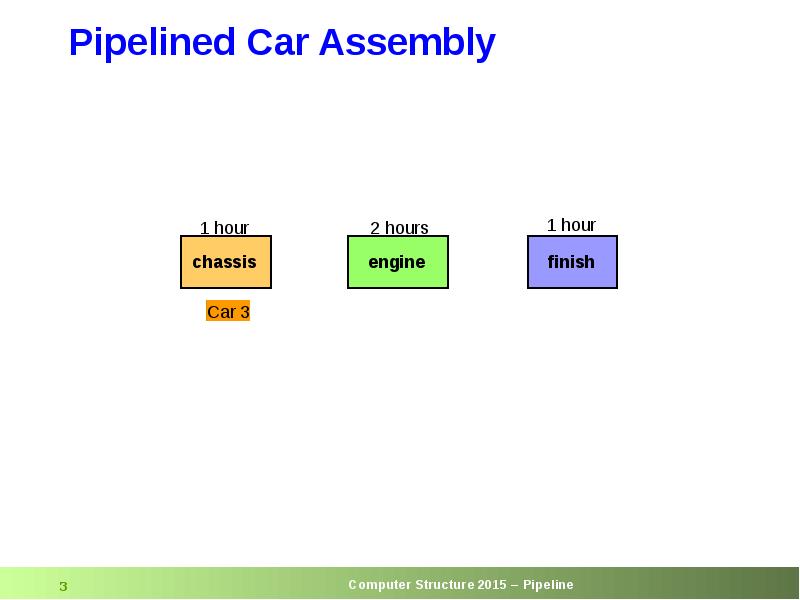

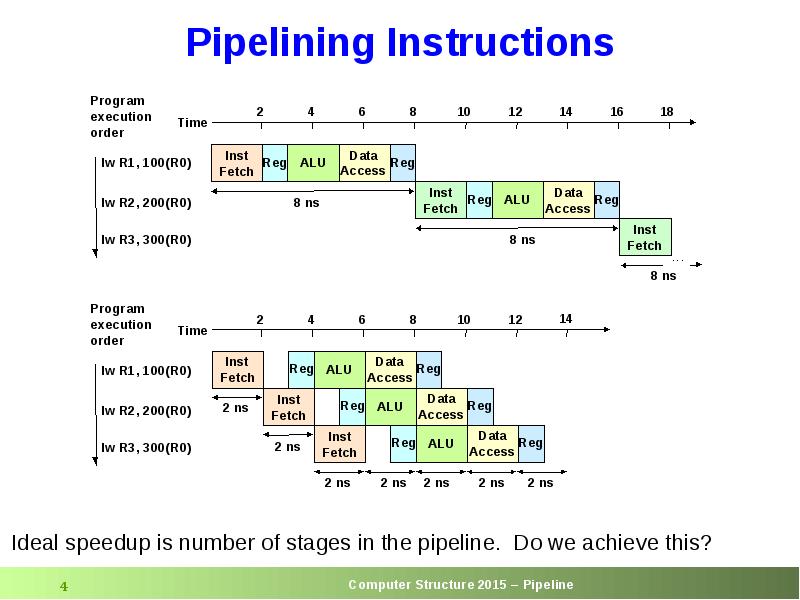

- 3. Pipelined Car Assembly

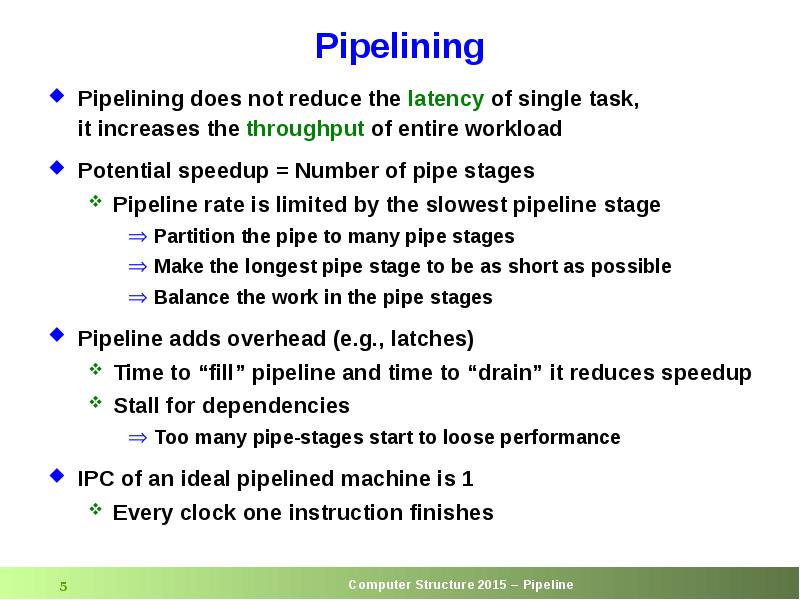

- 5. Pipelining: Pipelining does not reduce the latency of a single task, it ...

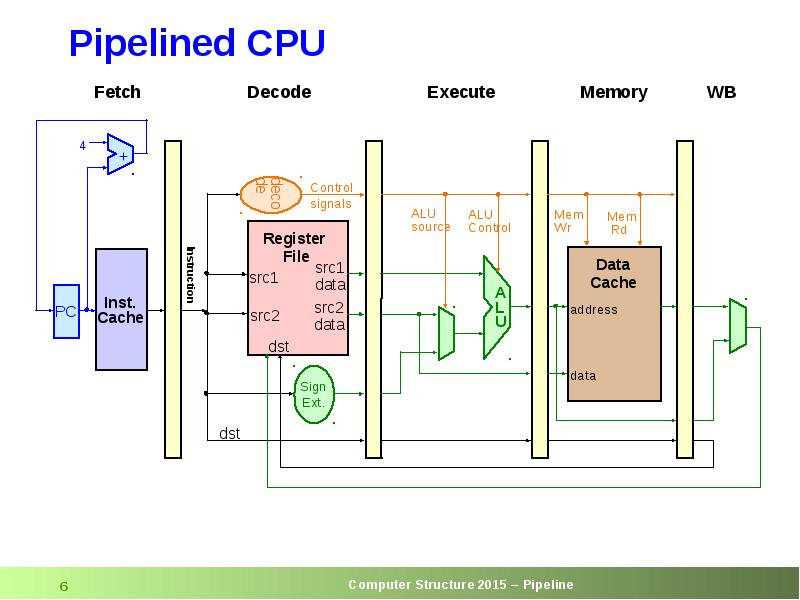
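The latency point on slide 5 can be checked with a small calculation. This is a minimal sketch, assuming an ideal pipeline with equal-length stages and no stalls; the function names and the example numbers are illustrative, not taken from the slides.

```python
def single_task_latency(n_stages: int, stage_time: float) -> float:
    """Time for one task to pass through every stage (same with or without pipelining)."""
    return n_stages * stage_time


def unpipelined_time(n_tasks: int, n_stages: int, stage_time: float) -> float:
    """Each task must finish all stages before the next task starts."""
    return n_tasks * n_stages * stage_time


def pipelined_time(n_tasks: int, n_stages: int, stage_time: float) -> float:
    """The first task fills the pipe; after that one task completes per stage time."""
    if n_tasks == 0:
        return 0.0
    return (n_stages + n_tasks - 1) * stage_time
```

With 100 tasks, 5 stages, and a stage time of 1, the unpipelined total is 500 and the pipelined total is 104, while the latency of any single task stays at 5 in both cases.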

- 6. Pipelined CPU

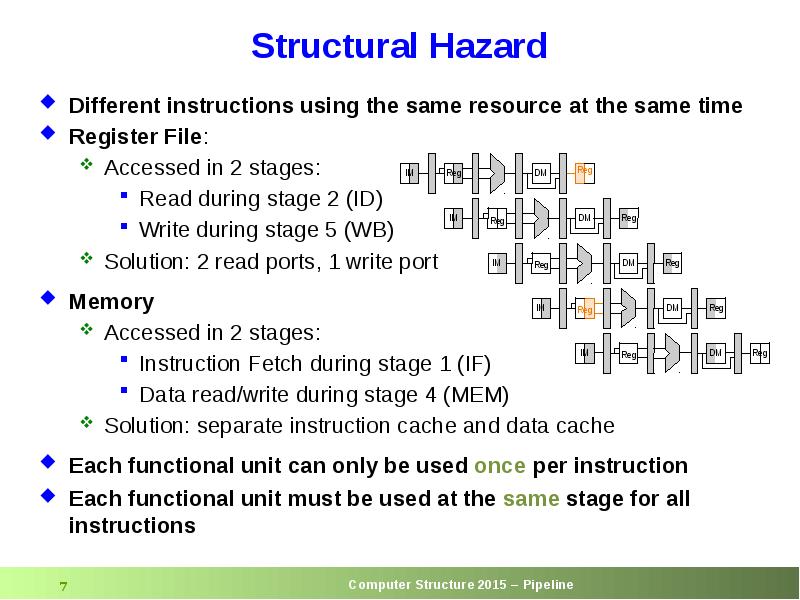

- 7. Structural Hazard: Different instructions using the same resource at the same ...

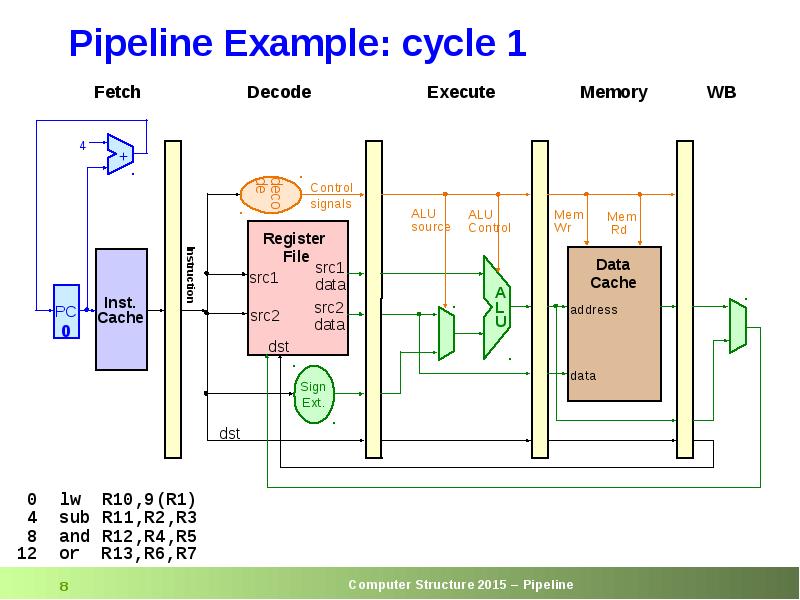

- 8. Pipeline Example: cycle 1

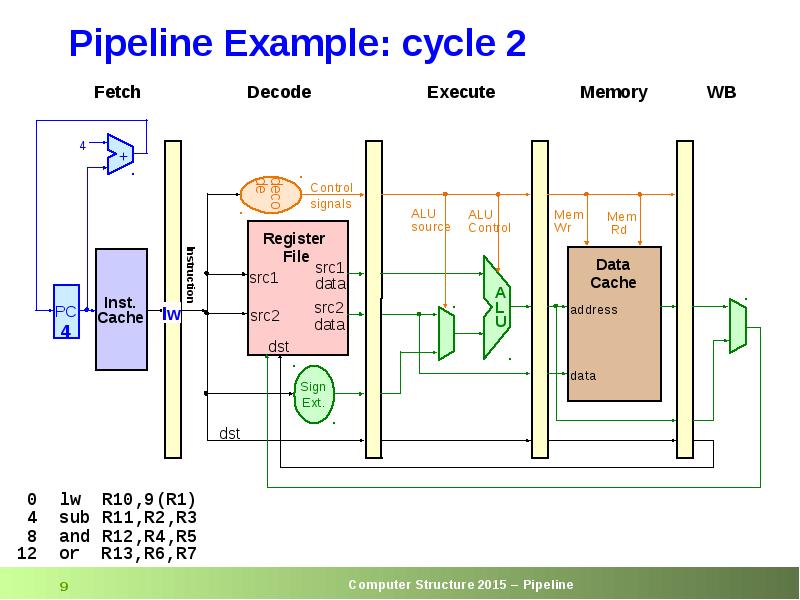

- 9. Pipeline Example: cycle 2

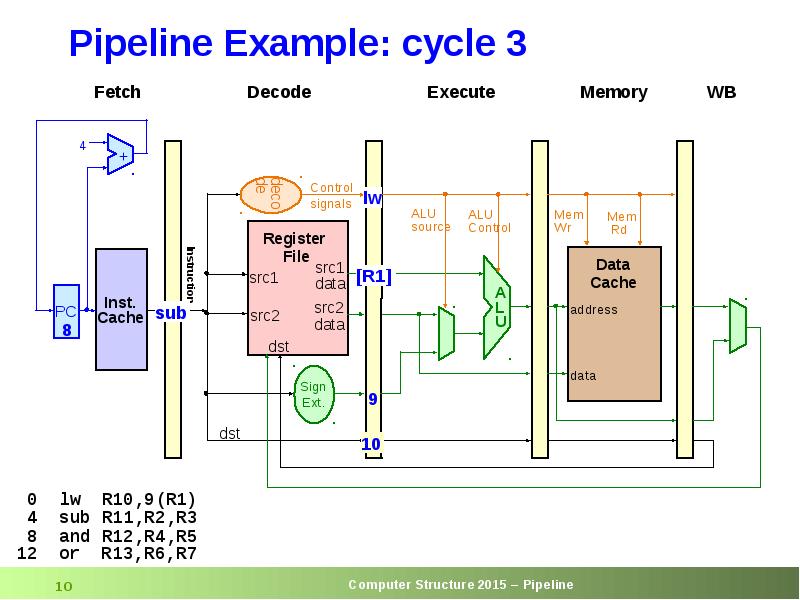

- 10. Pipeline Example: cycle 3

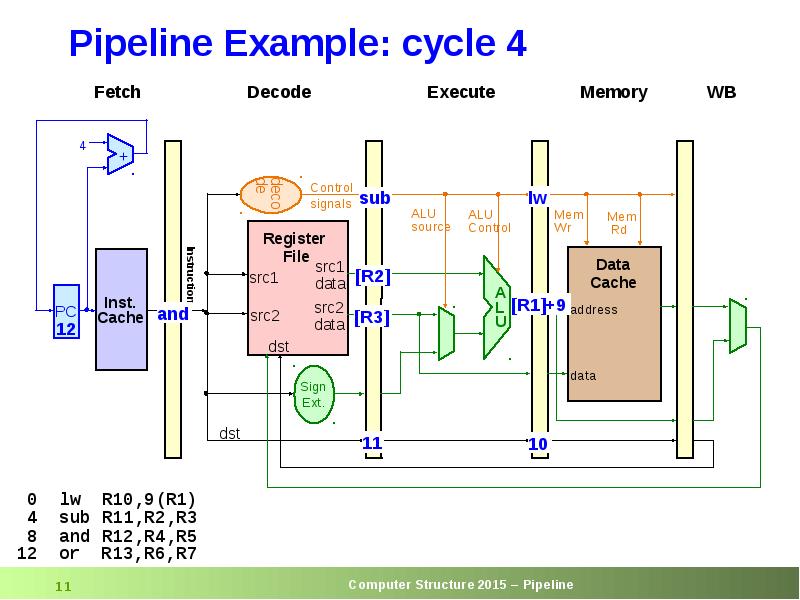

- 11. Pipeline Example: cycle 4

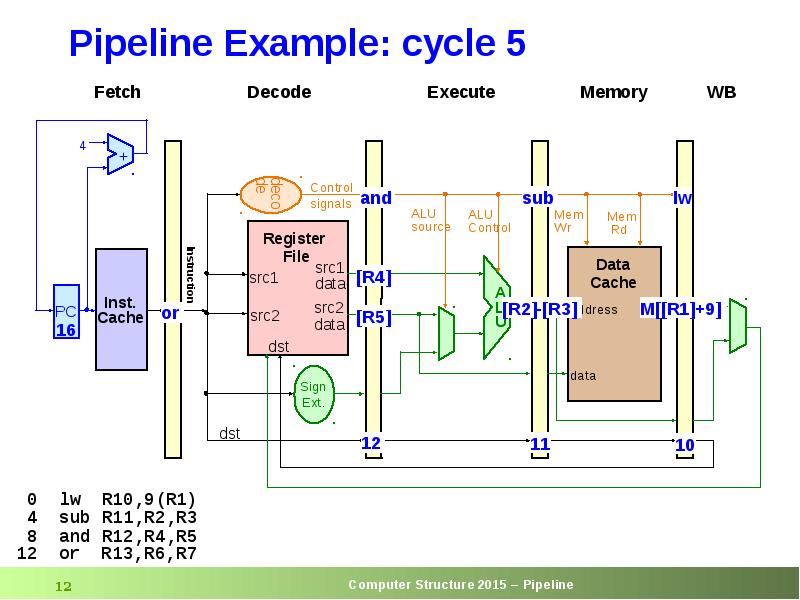

- 12. Pipeline Example: cycle 5

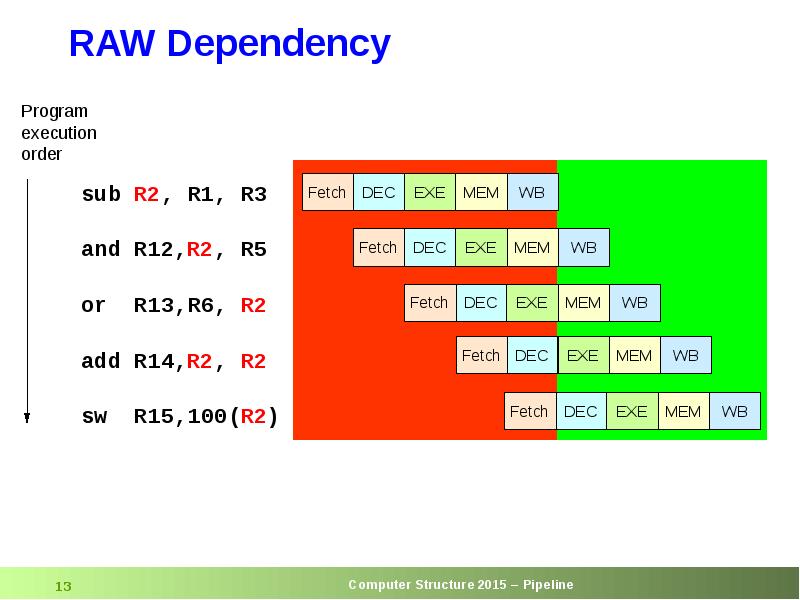

- 13. RAW Dependency

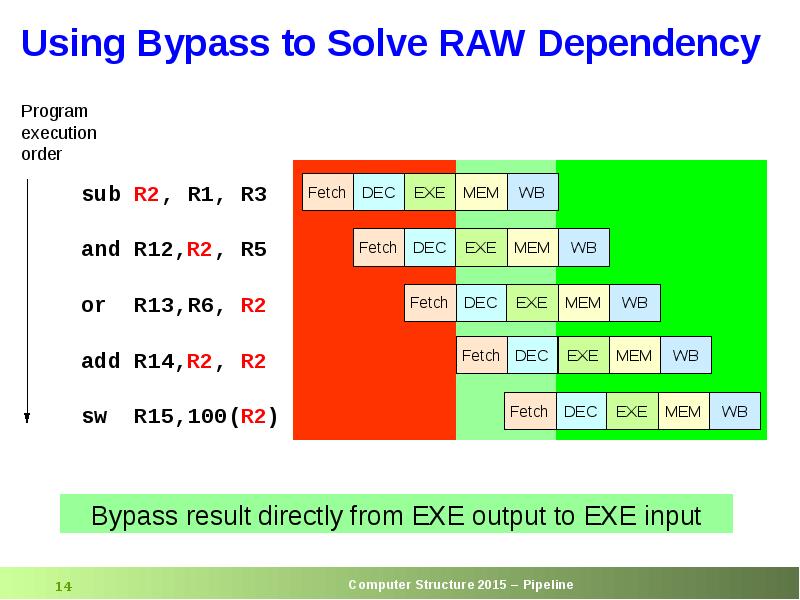

- 14. Using Bypass to Solve RAW Dependency

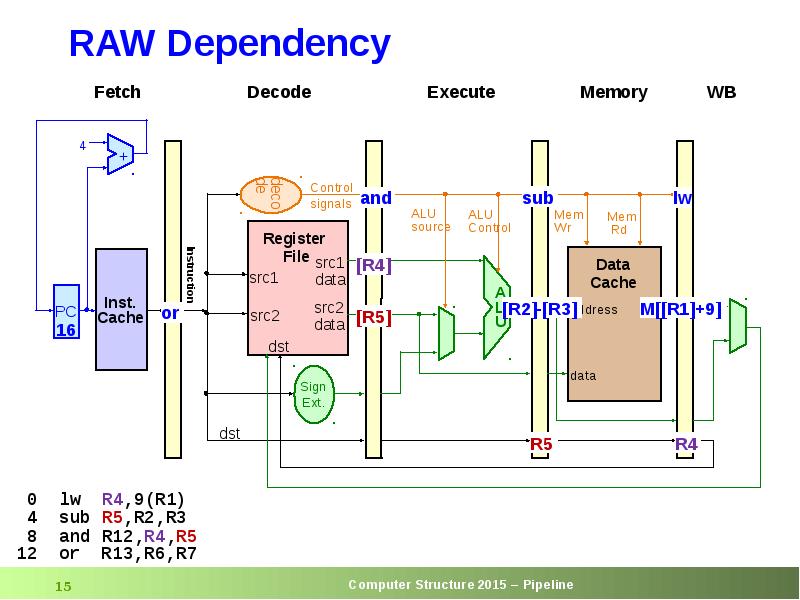

- 15. RAW Dependency

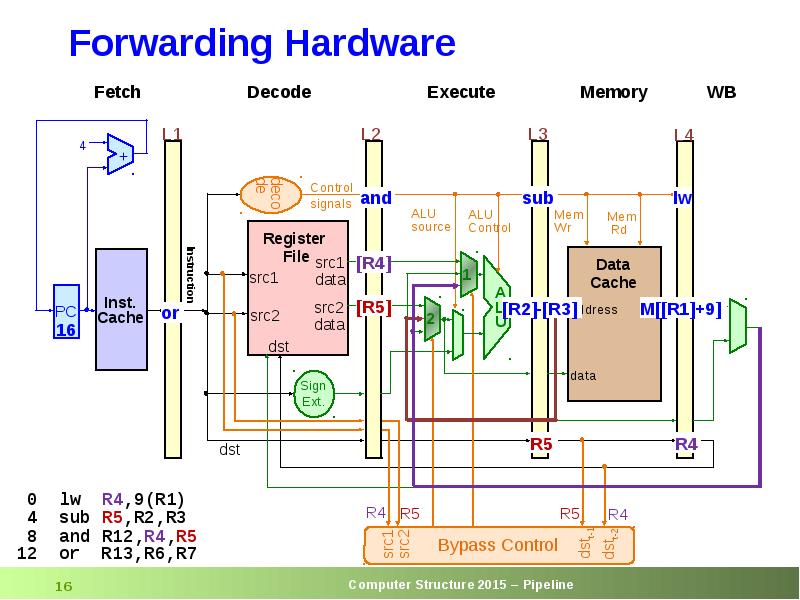

- 16. Forwarding Hardware

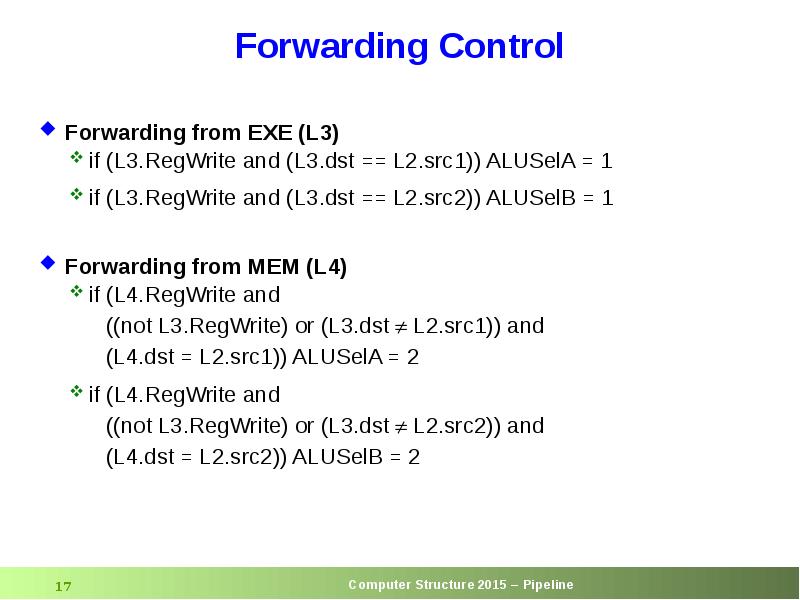

- 17. Forwarding Control: Forwarding from EXE (L3): if (L3.RegWrite and (L3.dst == ...

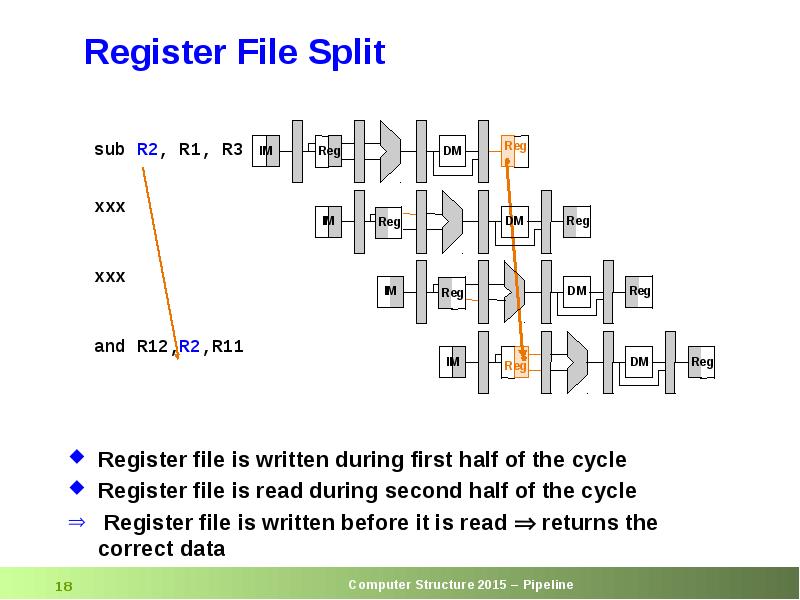
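The condition on slide 17 is cut off in this outline, so the following is only a sketch of the usual textbook forwarding check, not a reconstruction of the slide. It assumes that L3 is the latch after EXE and L4 the latch after MEM, that register 0 is hardwired to zero, and that the newer value in L3 takes priority; the `Latch` type and `forward_select` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Latch:
    reg_write: bool  # the instruction in this latch writes a register
    dst: int         # destination register number


def forward_select(src: int, l3: Latch, l4: Latch) -> str:
    """Choose where an ALU operand for source register `src` should come from."""
    # Newest producer first: the result sitting in the latch after EXE.
    if l3.reg_write and l3.dst != 0 and l3.dst == src:
        return "L3"
    # Otherwise the older result sitting in the latch after MEM.
    if l4.reg_write and l4.dst != 0 and l4.dst == src:
        return "L4"
    # No pending writer: use the value read from the register file.
    return "RF"
```

The check runs once per source operand, so an instruction with two register sources calls it twice.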

- 18. Register File Split: Register file is written during first half of ...

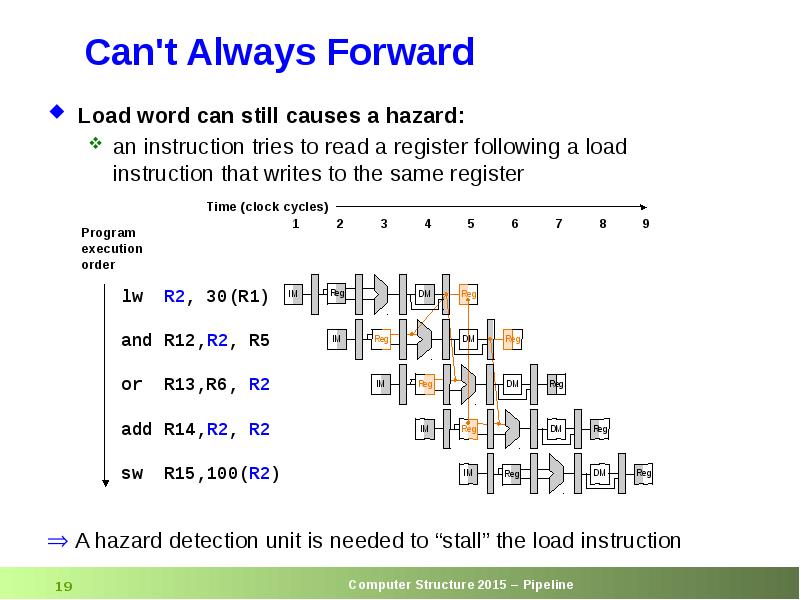

- 19. Can't Always Forward

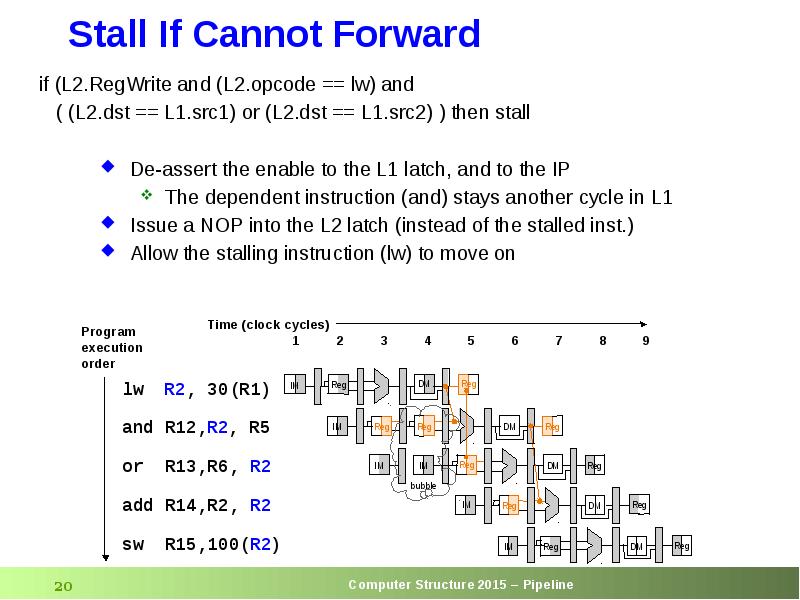

- 20. Stall If Cannot Forward

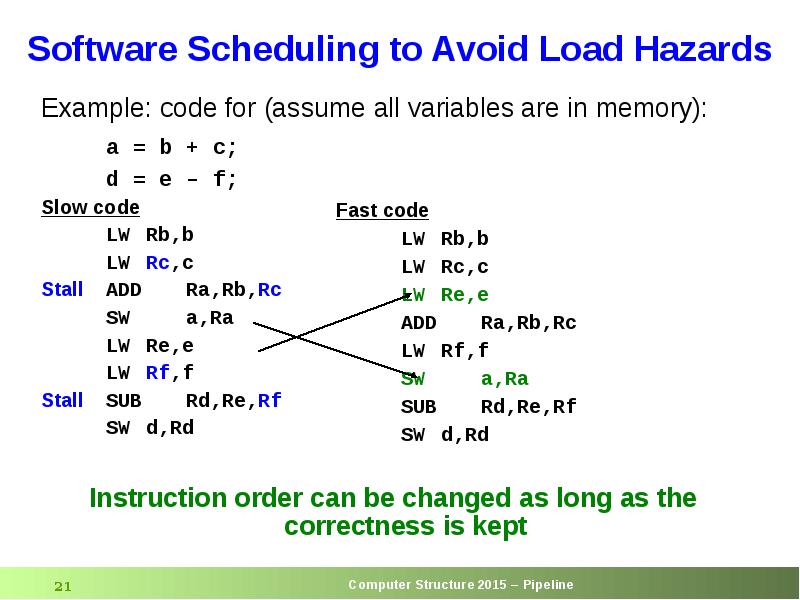
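The case where forwarding is not enough is typically a load followed immediately by an instruction that uses the loaded register. A minimal sketch of that check, assuming a five-stage pipeline with forwarding and register 0 hardwired to zero; the function and parameter names are hypothetical.

```python
def must_stall(exe_is_load: bool, exe_dst: int, dec_src1: int, dec_src2: int) -> bool:
    """True if the instruction in Dec needs a value that a load in Exe has not fetched yet."""
    return exe_is_load and exe_dst != 0 and exe_dst in (dec_src1, dec_src2)
```

When the check is true, the pipeline holds the dependent instruction for one cycle, after which the loaded value can be forwarded.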

- 21. Software Scheduling to Avoid Load Hazards: Fast code: LW Rb,b; LW ...
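The effect of reordering can be illustrated by counting load-use stalls in two orderings of the same computation. The slide's listing is truncated here, so the sequences below are an assumed textbook-style example (`a = b + c; d = e - f`), not the slide's code; the tuple layout and function name are hypothetical.

```python
# Each instruction is (opcode, dst, src1, src2); None marks an unused field.
def count_load_use_stalls(program):
    """Count one stall for every LW whose result is used by the very next instruction."""
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        op, dst, _, _ = prev
        if op == "LW" and dst in (cur[2], cur[3]):
            stalls += 1
    return stalls


slow = [
    ("LW", "Rb", None, None), ("LW", "Rc", None, None),
    ("ADD", "Ra", "Rb", "Rc"), ("SW", None, "Ra", None),
    ("LW", "Re", None, None), ("LW", "Rf", None, None),
    ("SUB", "Rd", "Re", "Rf"), ("SW", None, "Rd", None),
]

# Same instructions, with loads moved earlier so no load feeds the next instruction.
fast = [
    ("LW", "Rb", None, None), ("LW", "Rc", None, None),
    ("LW", "Re", None, None), ("ADD", "Ra", "Rb", "Rc"),
    ("LW", "Rf", None, None), ("SW", None, "Ra", None),
    ("SUB", "Rd", "Re", "Rf"), ("SW", None, "Rd", None),
]
```

Under these assumptions the first ordering incurs two stalls and the reordered one incurs none.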

- 22. Control Hazards

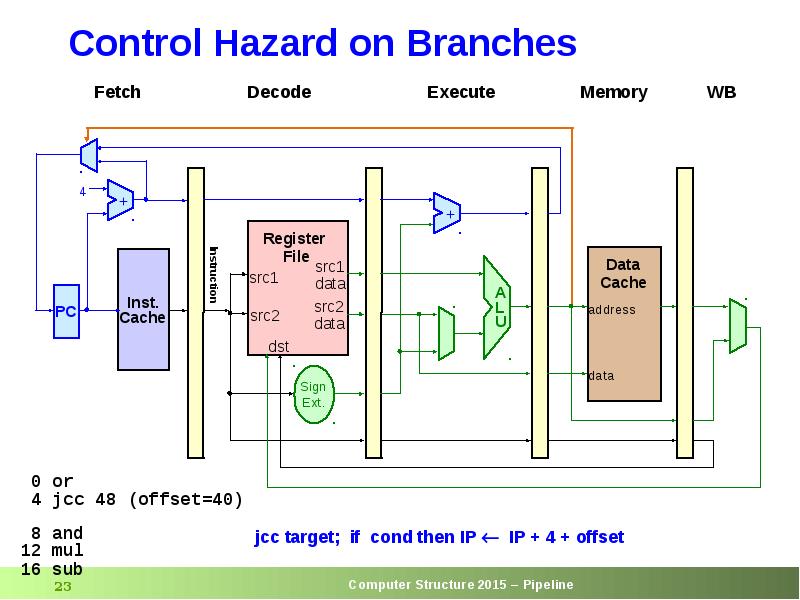

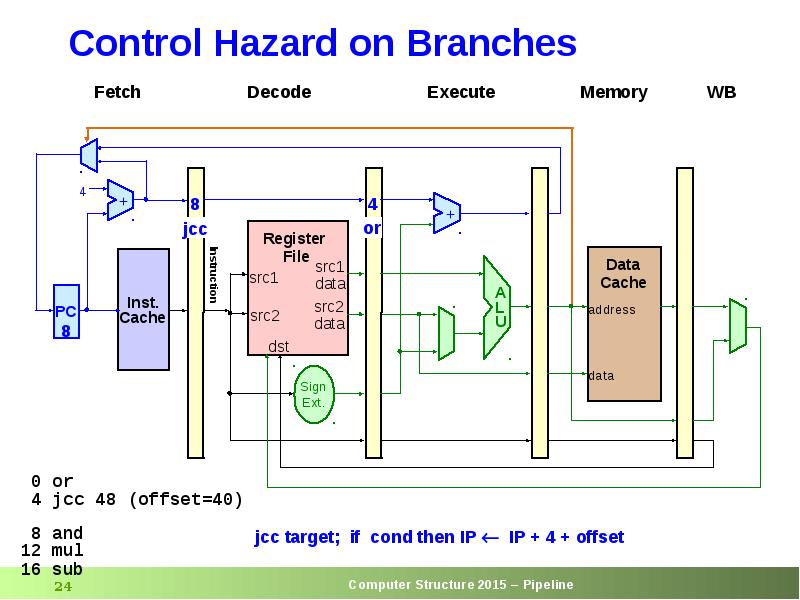

- 23. Control Hazard on Branches

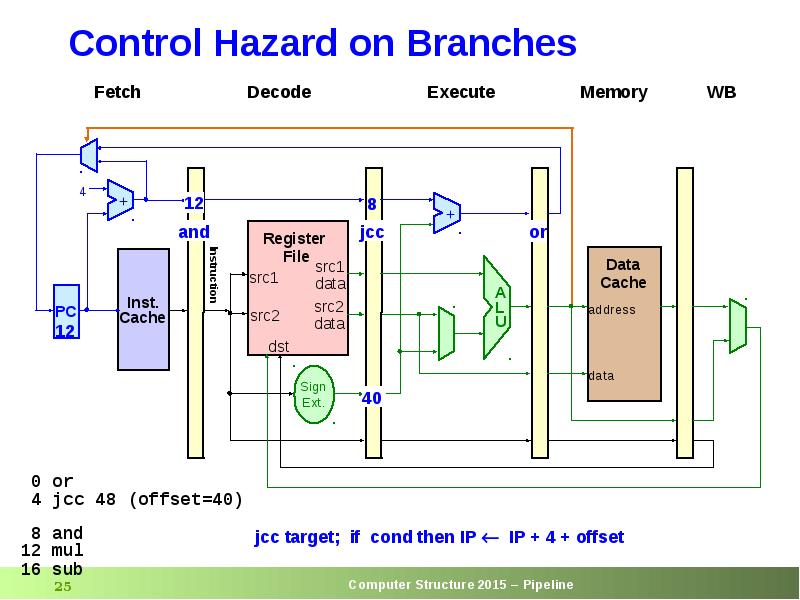

- 24. Control Hazard on Branches

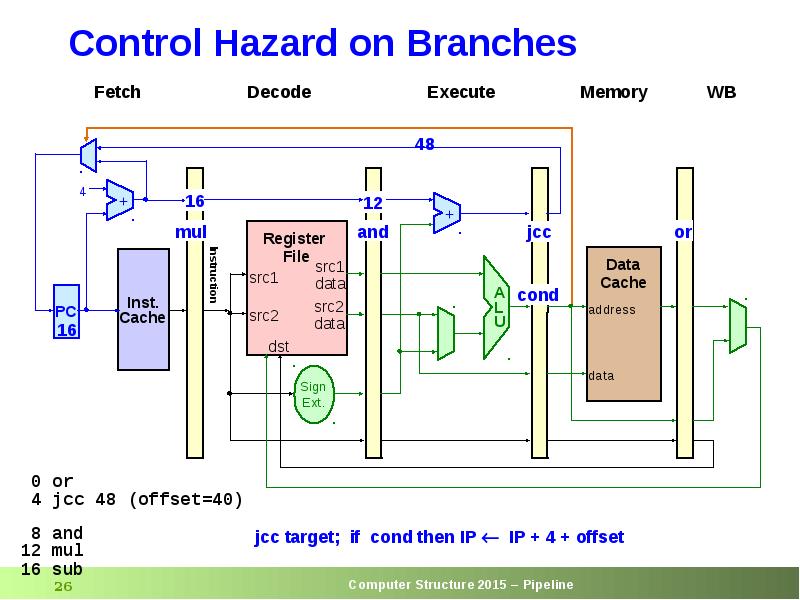

- 25. Control Hazard on Branches

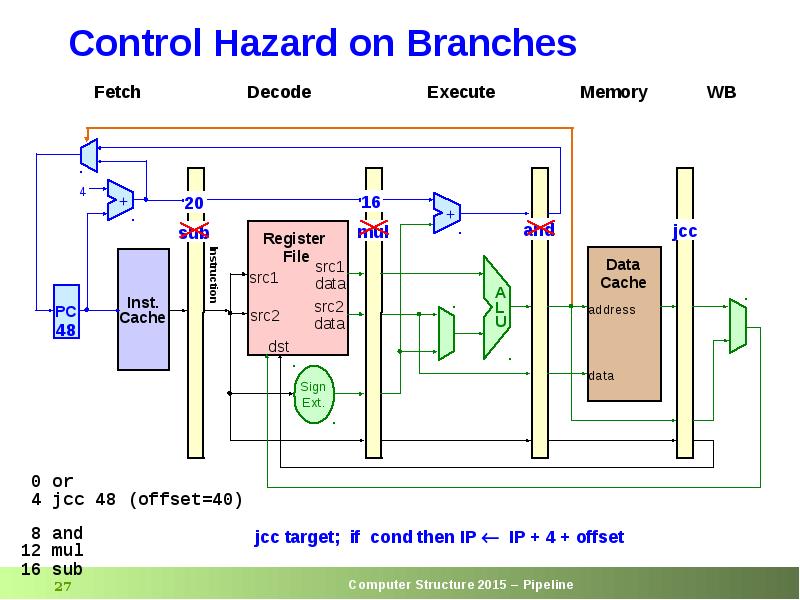

- 26. Control Hazard on Branches

- 27. Control Hazard on Branches

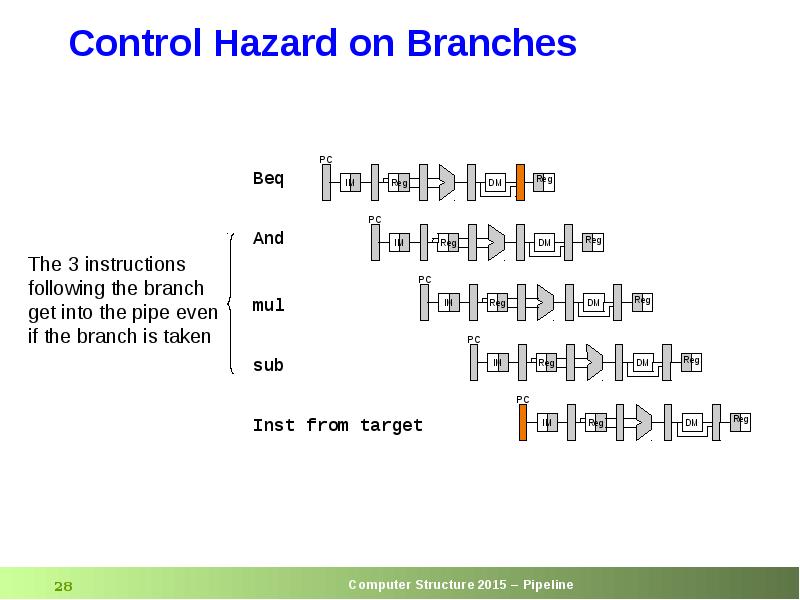

- 28. Control Hazard on Branches

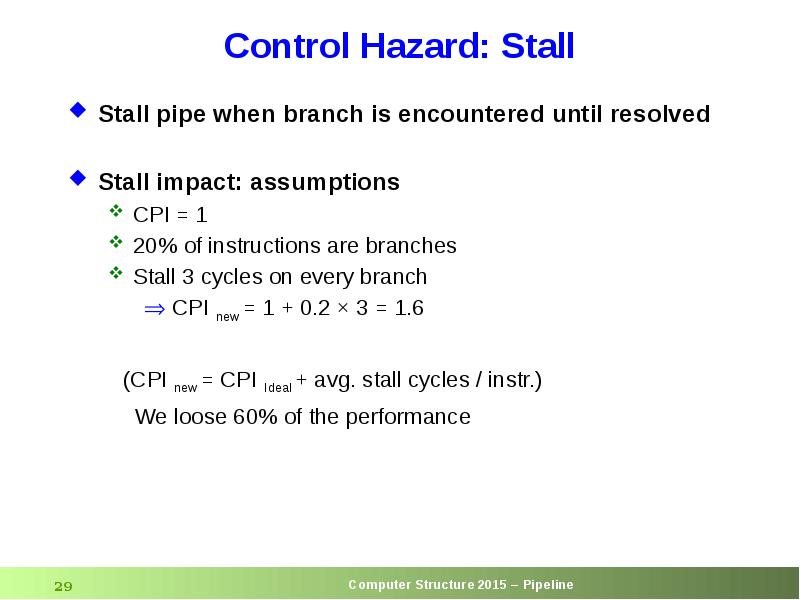

- 29. Control Hazard: Stall. Stall the pipe when a branch is encountered, until it is resolved

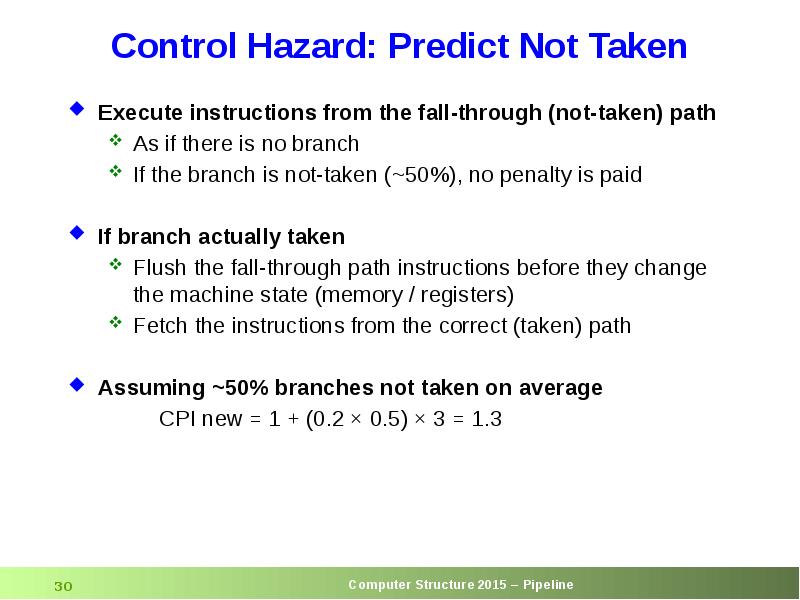

- 30. Control Hazard: Predict Not Taken. Execute instructions from the fall-through (not-taken) ...

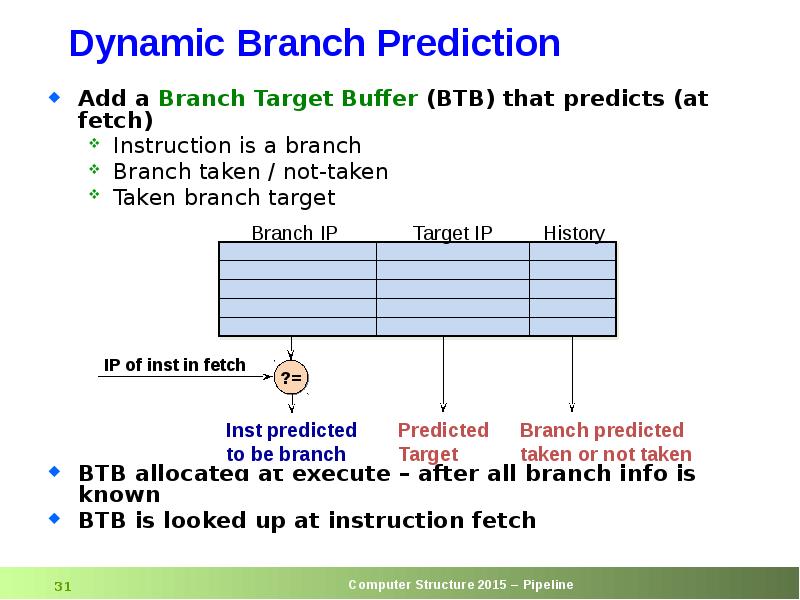
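The cost of the two policies above (stall on every branch, or predict not taken) can be compared with a simple CPI estimate. This sketch assumes a base CPI of 1 and no other stall sources; the parameter names and the example figures are illustrative, not values from the slides.

```python
def cpi_stall(branch_freq: float, penalty: int) -> float:
    """Every branch pays the full penalty while the pipe waits for it to resolve."""
    return 1.0 + branch_freq * penalty


def cpi_predict_not_taken(branch_freq: float, taken_rate: float, penalty: int) -> float:
    """Only taken branches pay the penalty, because fall-through instructions are kept."""
    return 1.0 + branch_freq * taken_rate * penalty
```

For example, with 20% branches, a 2-cycle penalty, and 60% of branches taken, stalling gives a CPI of about 1.4 while predict-not-taken gives about 1.24.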

- 31. Dynamic Branch Prediction

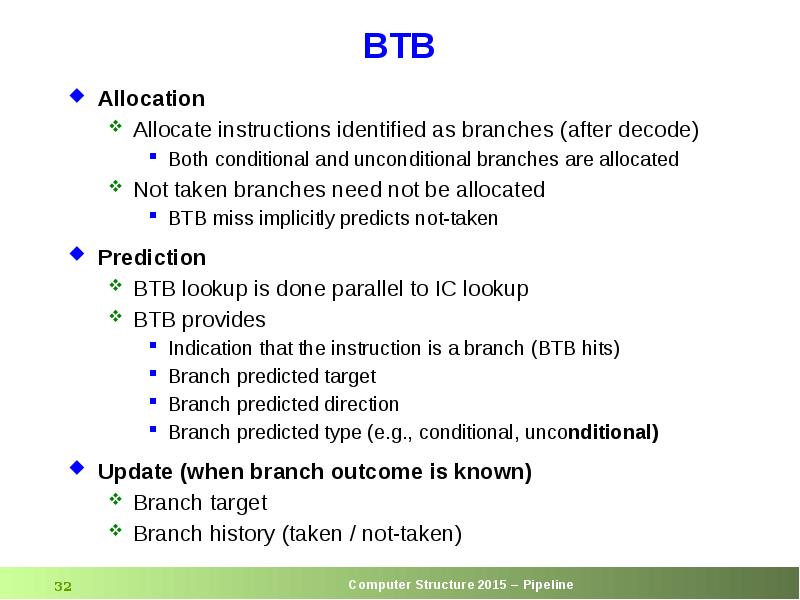

- 32. BTB Allocation: Allocate instructions identified as branches (after decode). Both conditional ...

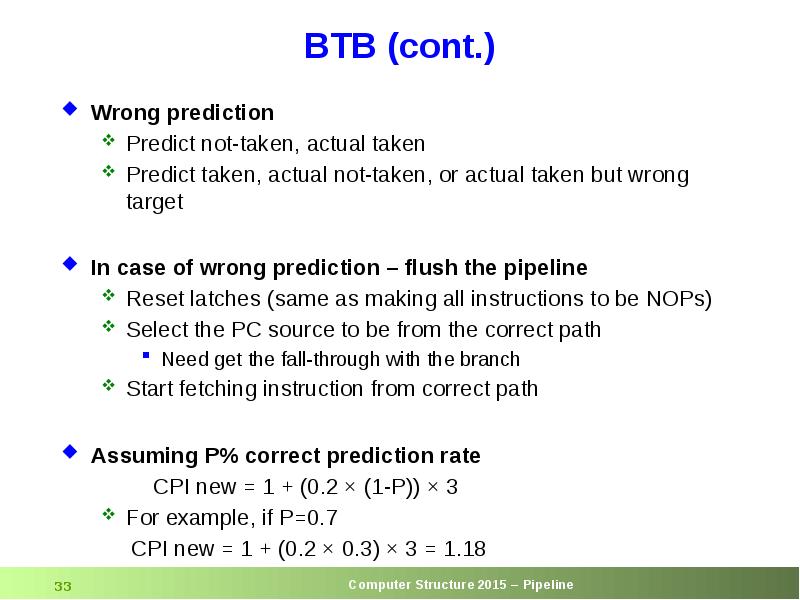

- 33. BTB (cont.): Wrong prediction: Predict not-taken, actual taken; Predict taken, actual ...

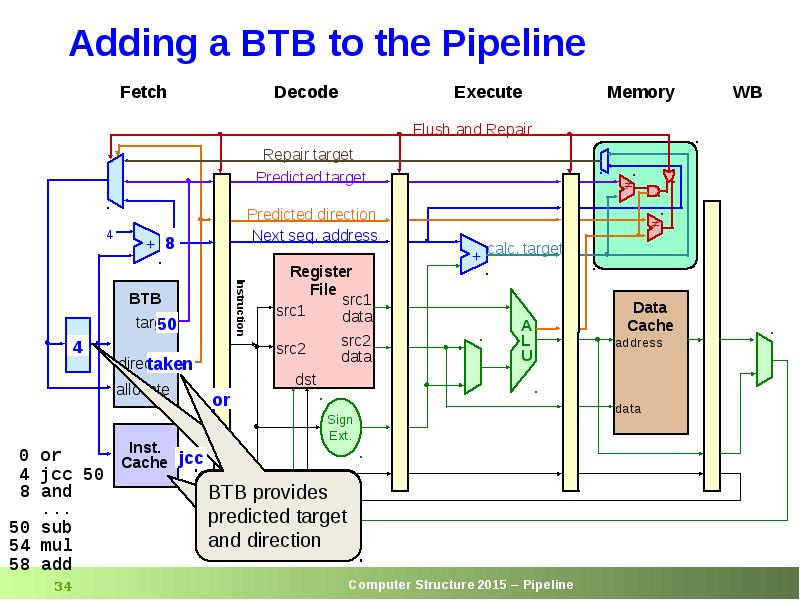
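A branch target buffer can be sketched as a small tagged table indexed by the branch address. The version below is deliberately simplified and rests on several assumptions not stated in the slides: direct-mapped organization, 4-byte instructions, and a last-outcome predictor in place of whatever prediction scheme the deck describes. The class and method names are hypothetical.

```python
class BTB:
    def __init__(self, n_entries: int = 16):
        self.n_entries = n_entries
        self.entries = {}  # index -> (branch_pc, target, predict_taken)

    def _index(self, pc: int) -> int:
        # Drop the two always-zero low bits of a word-aligned PC.
        return (pc >> 2) % self.n_entries

    def predict(self, pc: int) -> int:
        """Return the predicted next PC: the stored target on a taken hit, else pc + 4."""
        entry = self.entries.get(self._index(pc))
        if entry is not None and entry[0] == pc and entry[2]:
            return entry[1]
        return pc + 4

    def update(self, pc: int, taken: bool, target: int) -> None:
        """Allocate or refresh the entry once the branch has been identified and resolved."""
        self.entries[self._index(pc)] = (pc, target, taken)
```

A miss behaves like predict-not-taken, so only branches that have been seen before can be predicted taken.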

- 34. Adding a BTB to the Pipeline

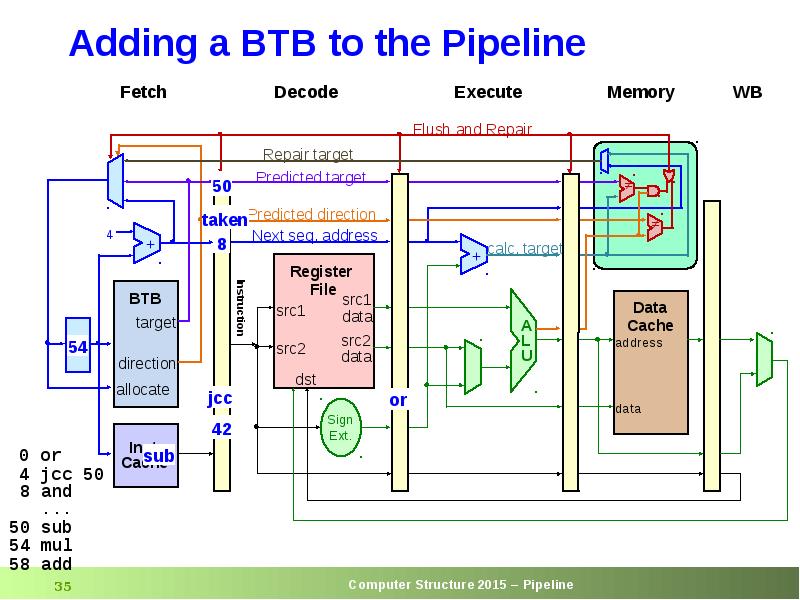

- 35. Adding a BTB to the Pipeline

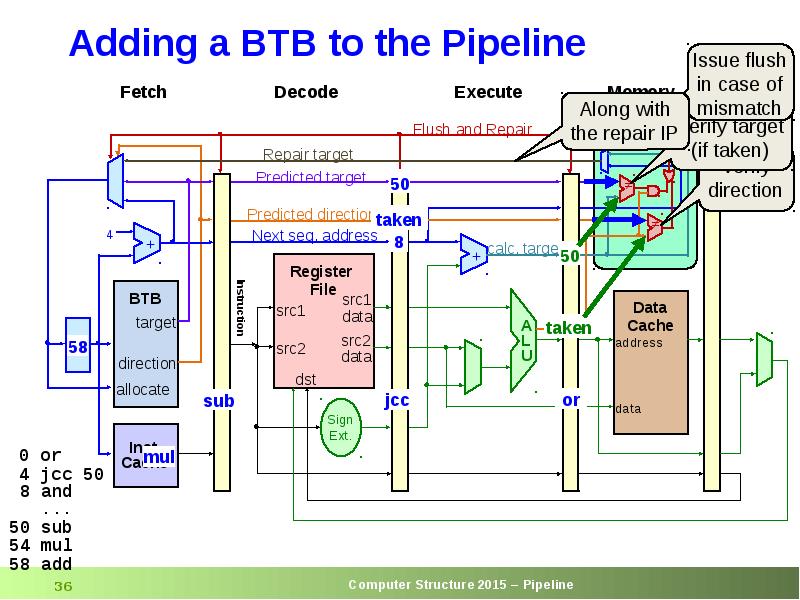

- 36. Adding a BTB to the Pipeline

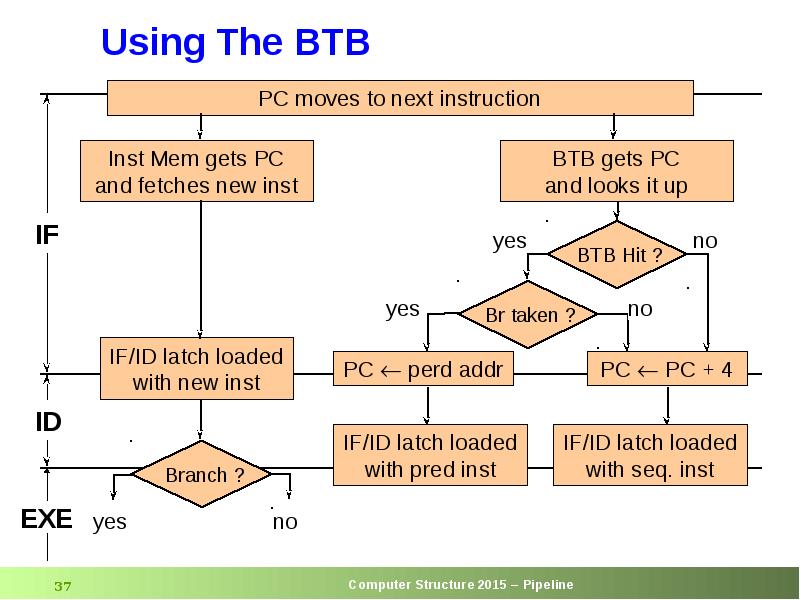

- 37. Using The BTB

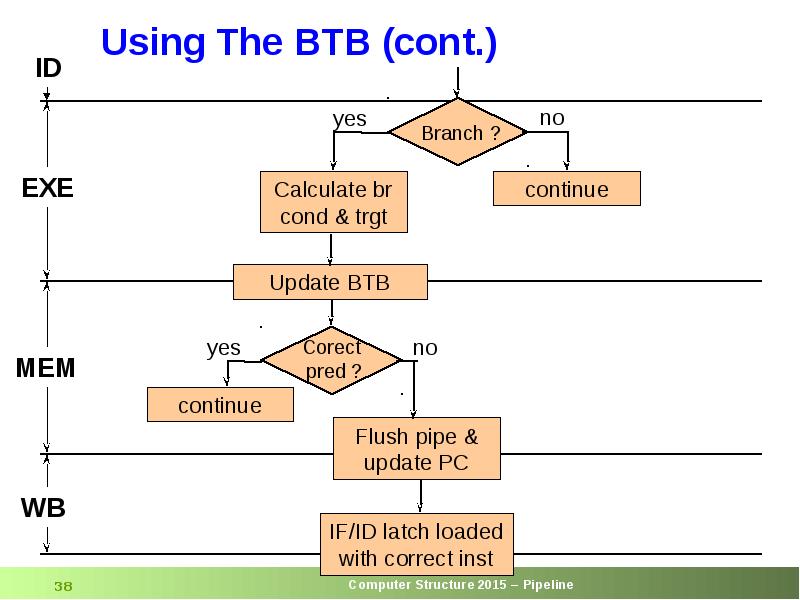

- 38. Using The BTB (cont.)

- 39. Backup

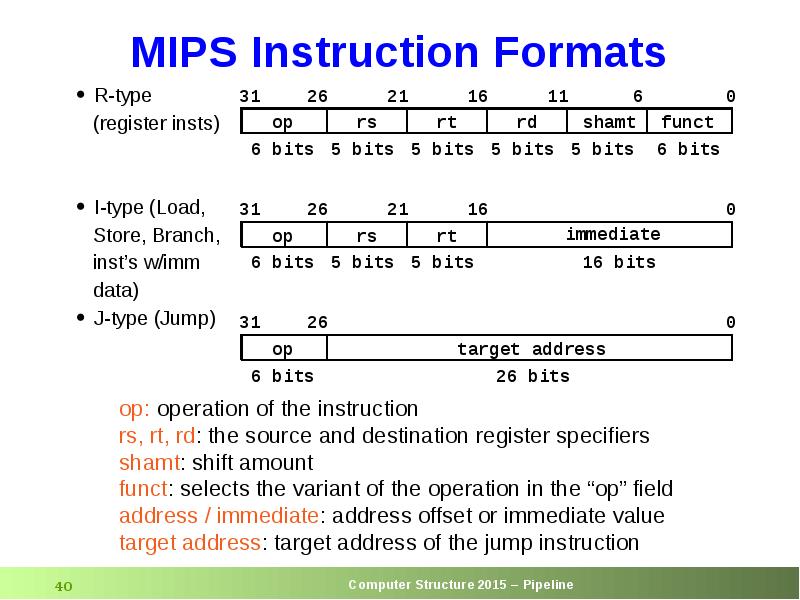

- 40. MIPS Instruction Formats

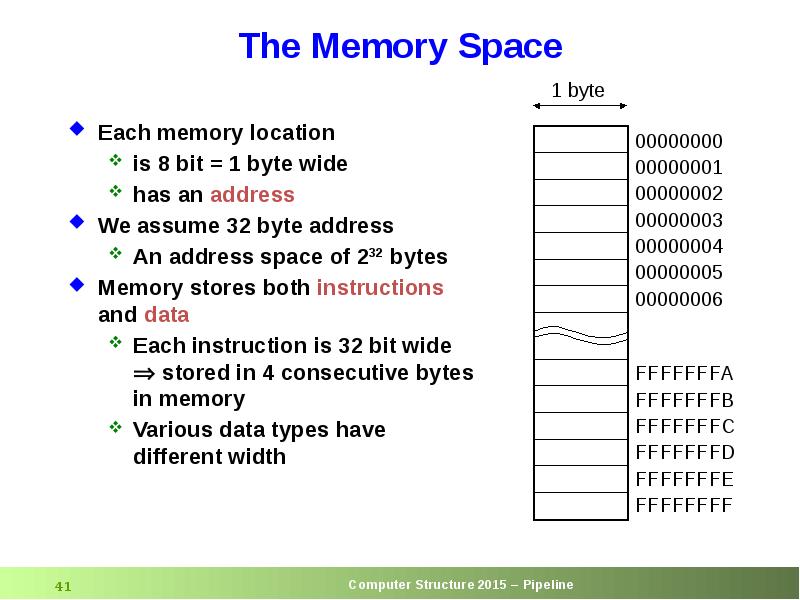
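The three MIPS instruction formats share a 32-bit word and differ only in how the low 26 bits are split. A minimal sketch of field extraction using the standard MIPS32 field positions; the function name and the returned dictionary layout are illustrative.

```python
def decode_fields(word: int) -> dict:
    """Split a 32-bit MIPS instruction word into the fields used by R-, I-, and J-type formats."""
    return {
        "op":     (word >> 26) & 0x3F,  # bits 31..26, all formats
        "rs":     (word >> 21) & 0x1F,  # bits 25..21, R and I
        "rt":     (word >> 16) & 0x1F,  # bits 20..16, R and I
        "rd":     (word >> 11) & 0x1F,  # bits 15..11, R only
        "shamt":  (word >> 6) & 0x1F,   # bits 10..6,  R only
        "funct":  word & 0x3F,          # bits 5..0,   R only
        "imm":    word & 0xFFFF,        # bits 15..0,  I only
        "target": word & 0x3FFFFFF,     # bits 25..0,  J only
    }
```

For instance, `0x012A4020` encodes `add $t0, $t1, $t2`: op 0, rs 9, rt 10, rd 8, funct 0x20.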

- 41. The Memory Space: Each memory location is 8 bits = ...

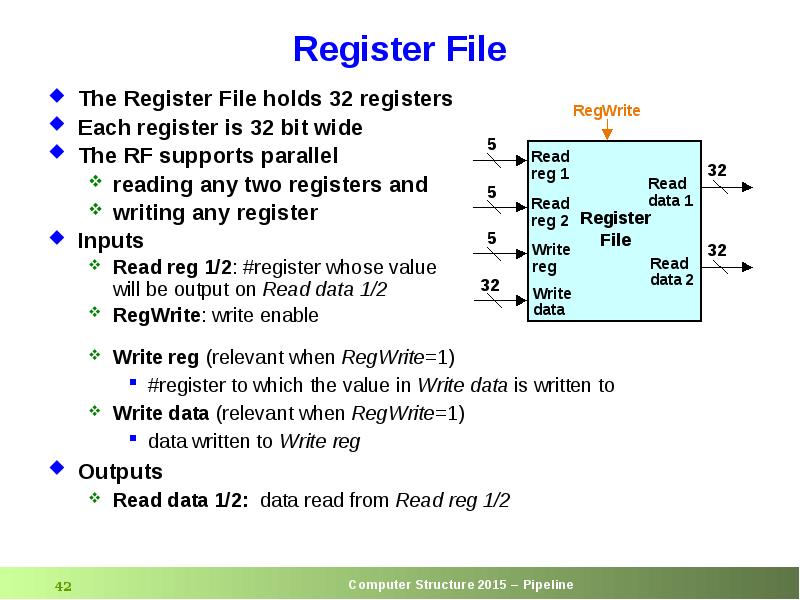

- 42. Register File: The Register File holds 32 registers. Each register is ...

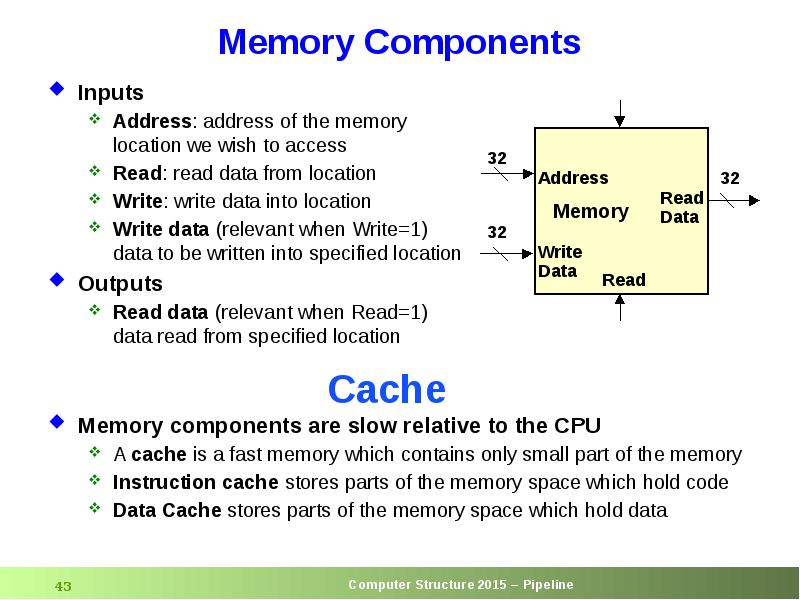

- 43. Memory Components: Inputs: Address: address of the memory location we wish ...

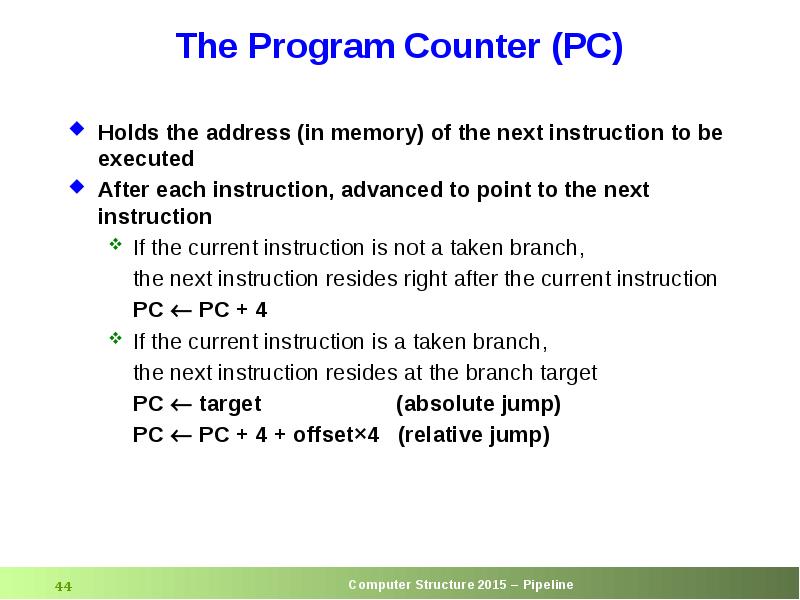

- 44. The Program Counter (PC): Holds the address (in memory) of the ...

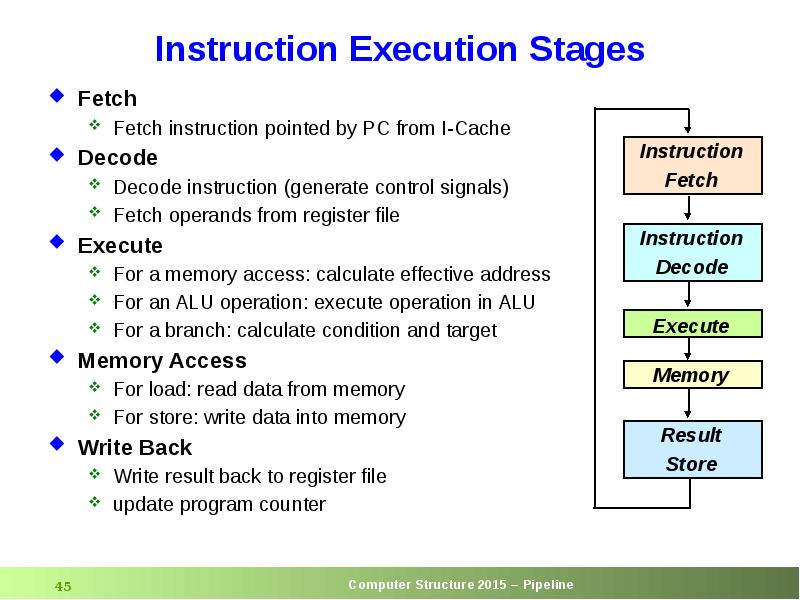

- 45. Instruction Execution Stages: Fetch: Fetch the instruction pointed to by PC from I-Cache ...

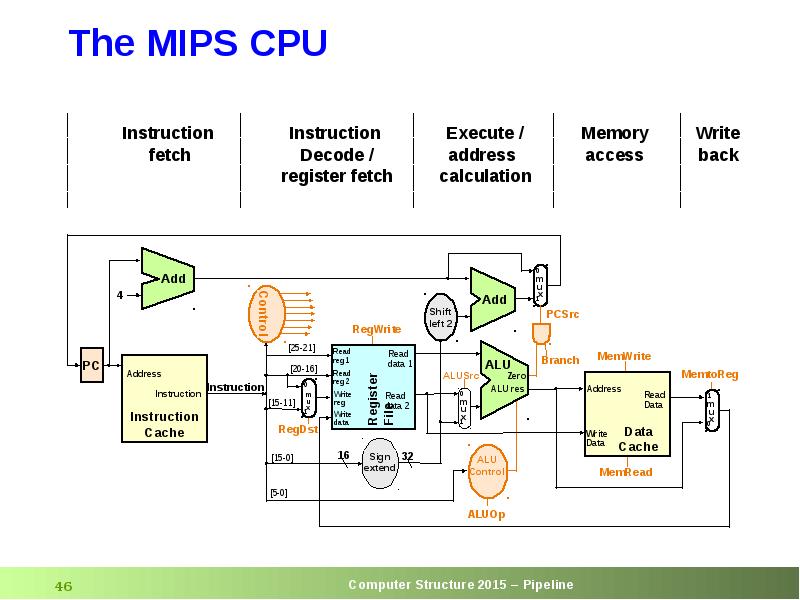
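How instructions move through the stages one cycle at a time can be sketched as a stage-occupancy lookup. This assumes an ideal five-stage pipeline with no stalls, using the stage names that appear in the deck's load walkthrough (Fetch, Dec, Exe, Mem, WB); the function name and zero-based instruction indices are illustrative.

```python
STAGES = ["Fetch", "Dec", "Exe", "Mem", "WB"]


def occupancy(n_instr: int, cycle: int) -> dict:
    """Map each busy stage to the index of the instruction it holds in the given 1-based cycle."""
    result = {}
    for depth, name in enumerate(STAGES):
        # Instruction i enters Fetch in cycle i + 1 and advances one stage per cycle.
        i = cycle - 1 - depth
        if 0 <= i < n_instr:
            result[name] = i
    return result
```

In cycle 1 only Fetch is busy; by cycle 5 all five stages hold a different instruction, which is the steady state the pipeline example slides build up to.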

- 46. The MIPS CPU

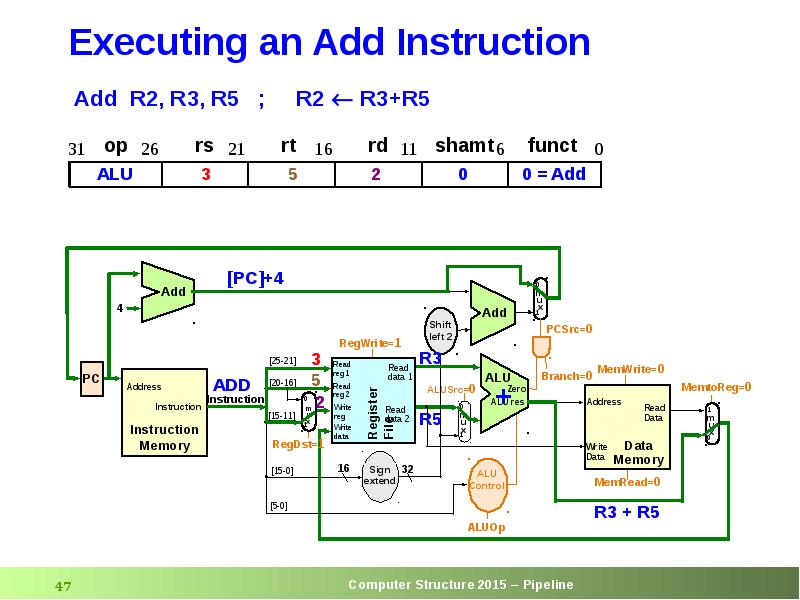

- 47. Executing an Add Instruction

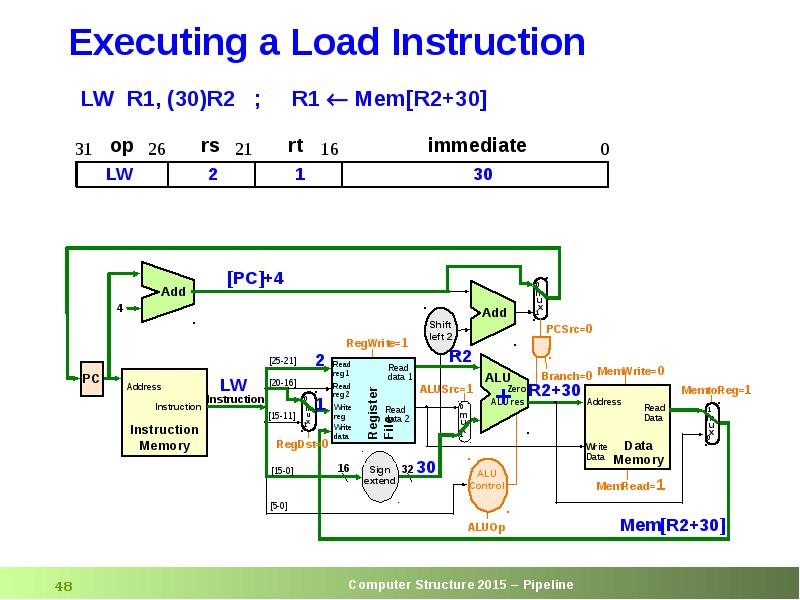

- 48. Executing a Load Instruction

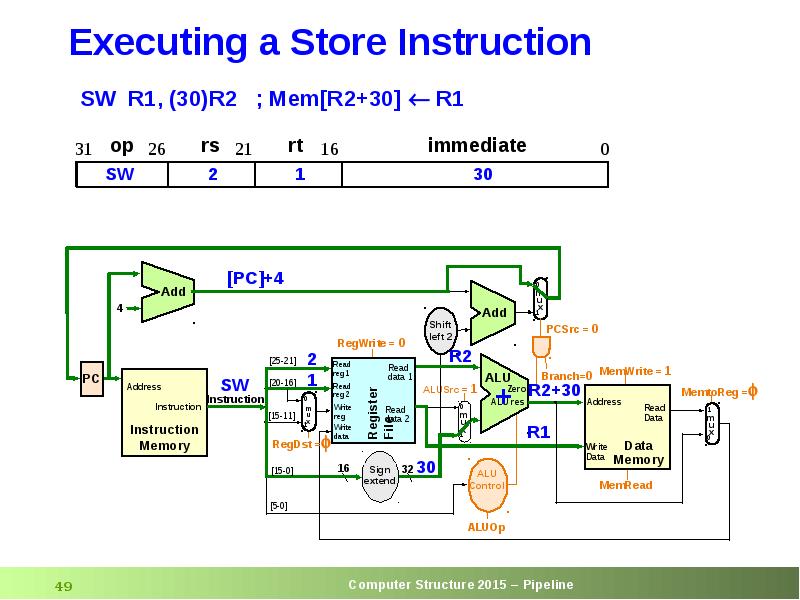

- 49. Executing a Store Instruction

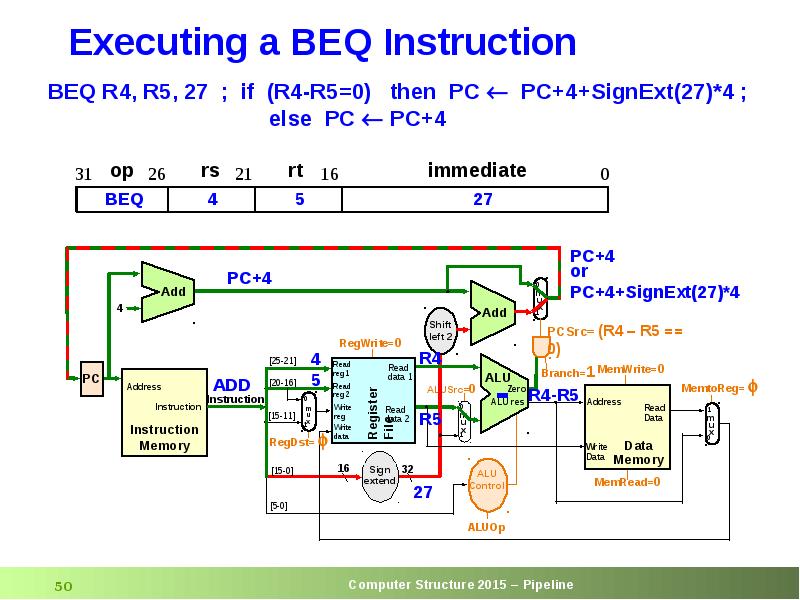

- 50. Executing a BEQ Instruction

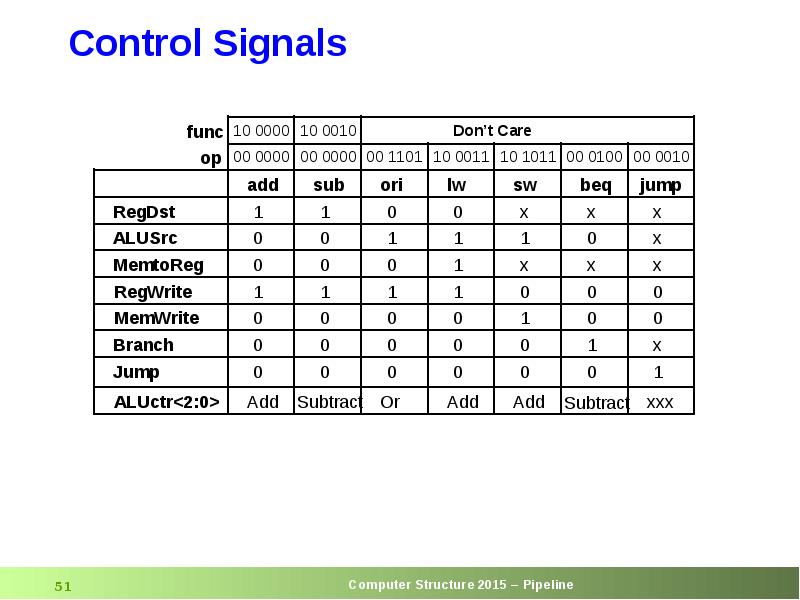

- 51. Control Signals

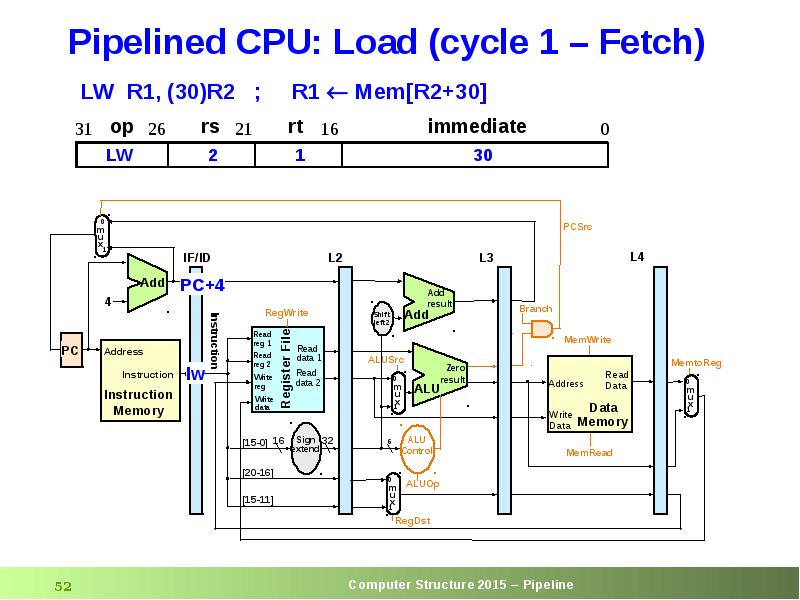

- 52. Pipelined CPU: Load (cycle 1 – Fetch)

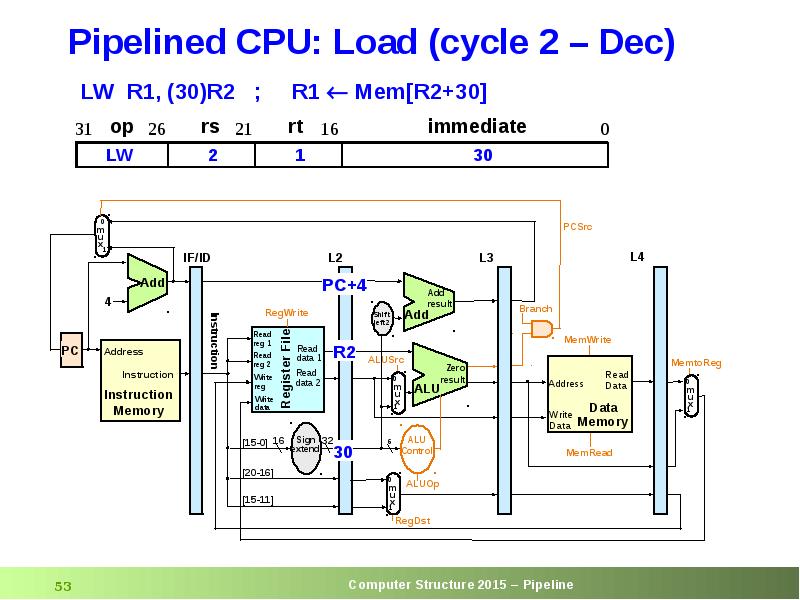

- 53. Pipelined CPU: Load (cycle 2 – Dec)

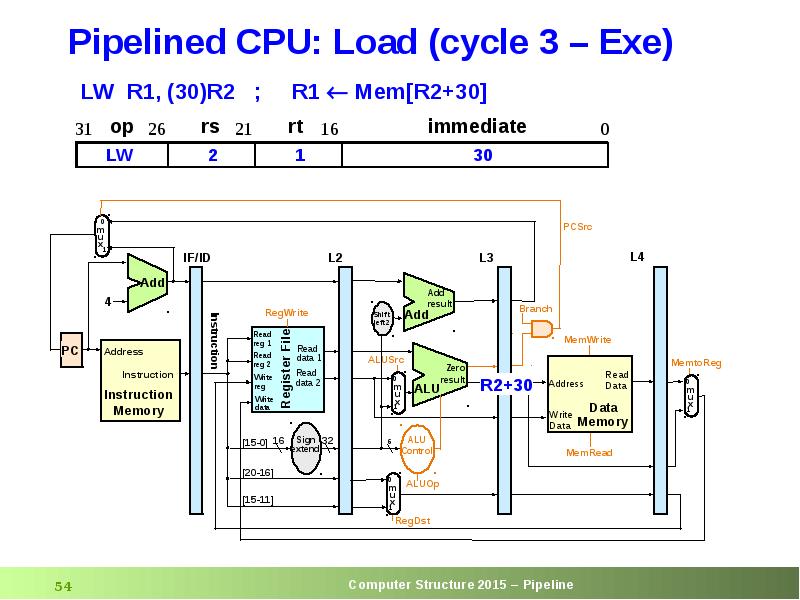

- 54. Pipelined CPU: Load (cycle 3 – Exe)

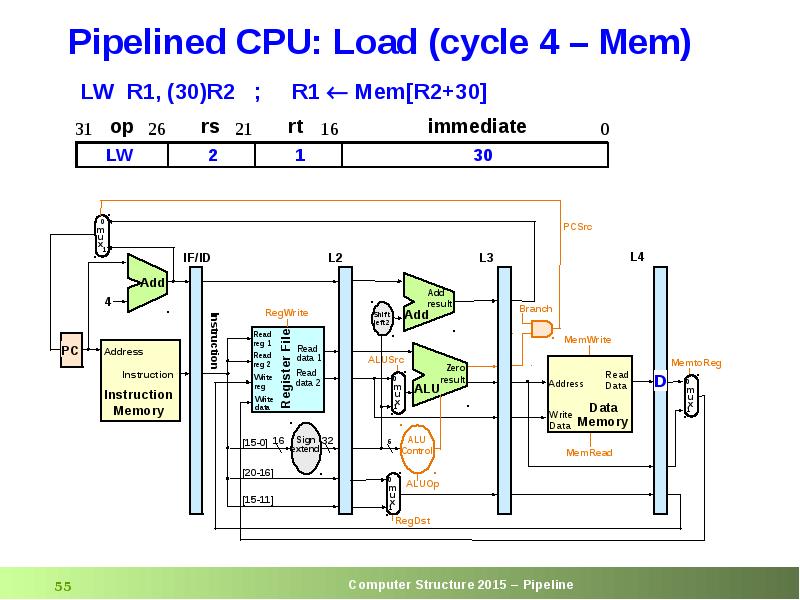

- 55. Pipelined CPU: Load (cycle 4 – Mem)

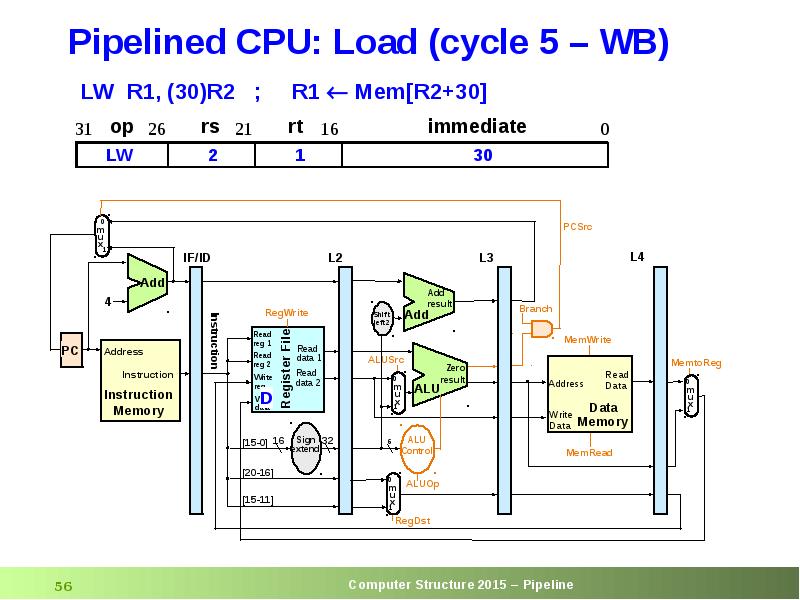

- 56. Pipelined CPU: Load (cycle 5 – WB)

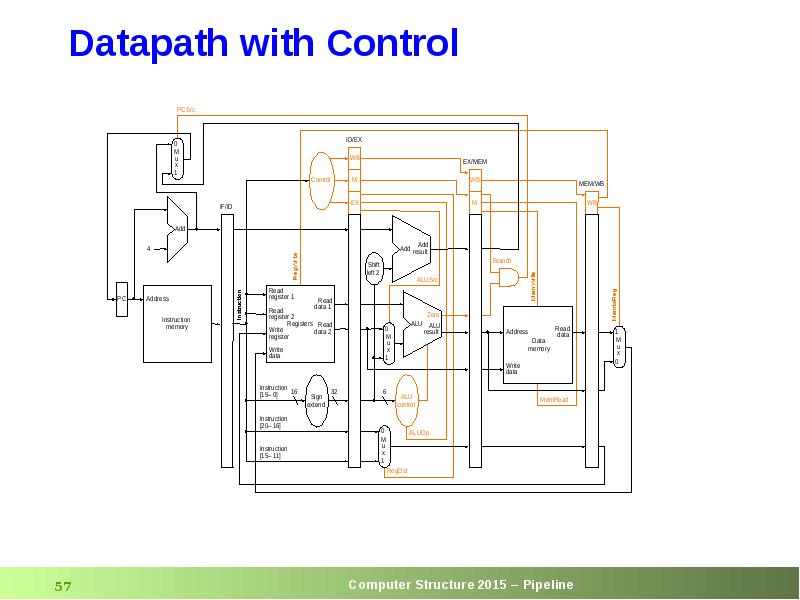

- 57. Datapath with Control

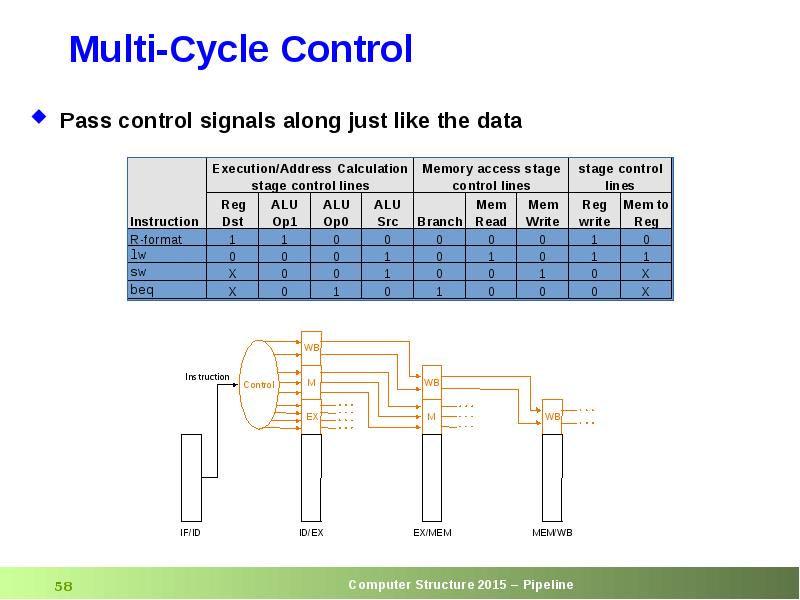

- 58. Multi-Cycle Control

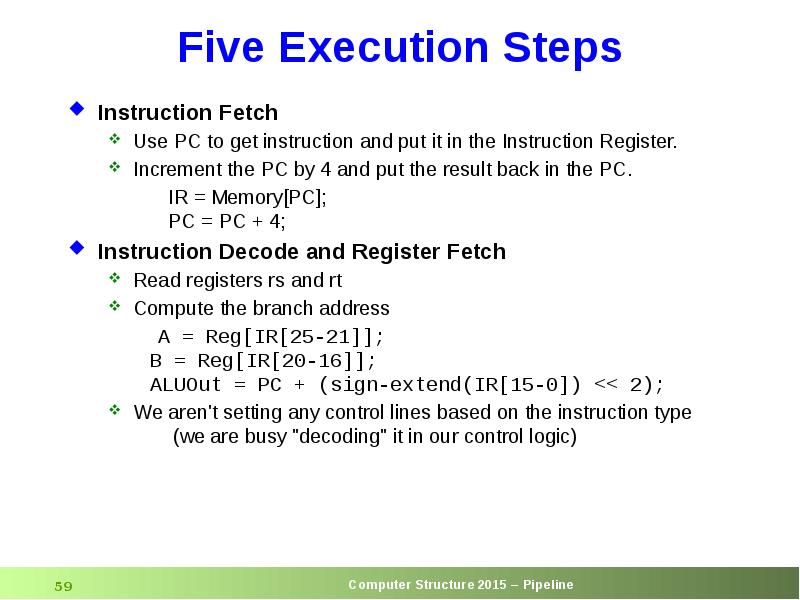

- 59. Five Execution Steps: Instruction Fetch: Use PC to get instruction and ...

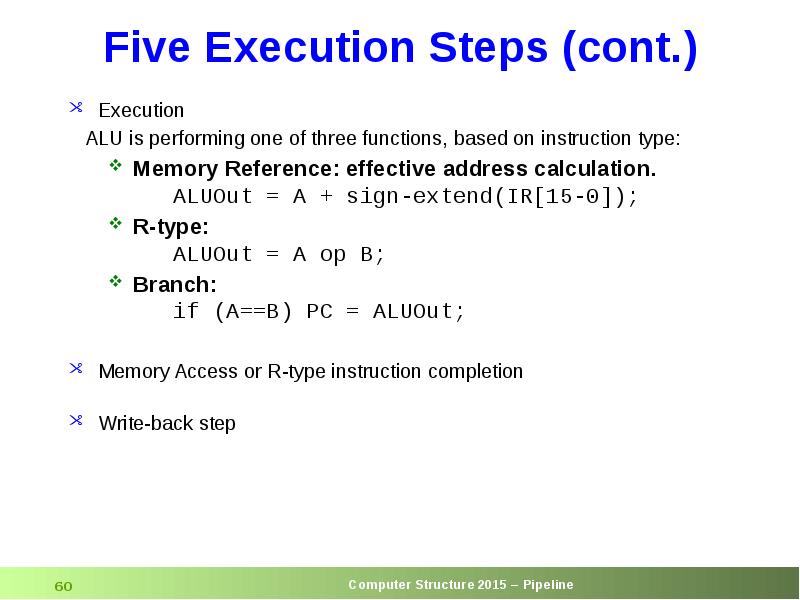

- 60. Five Execution Steps (cont.): Execution: ALU is performing one of ...

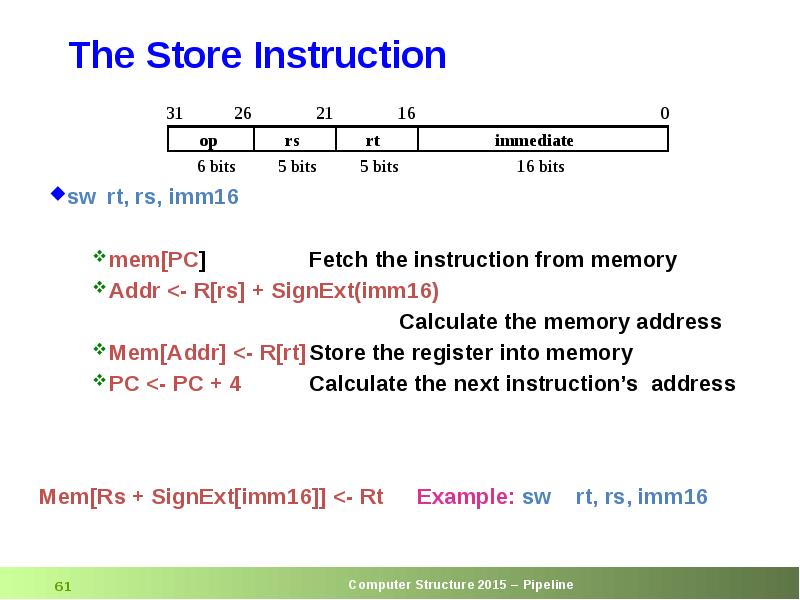

- 61. The Store Instruction

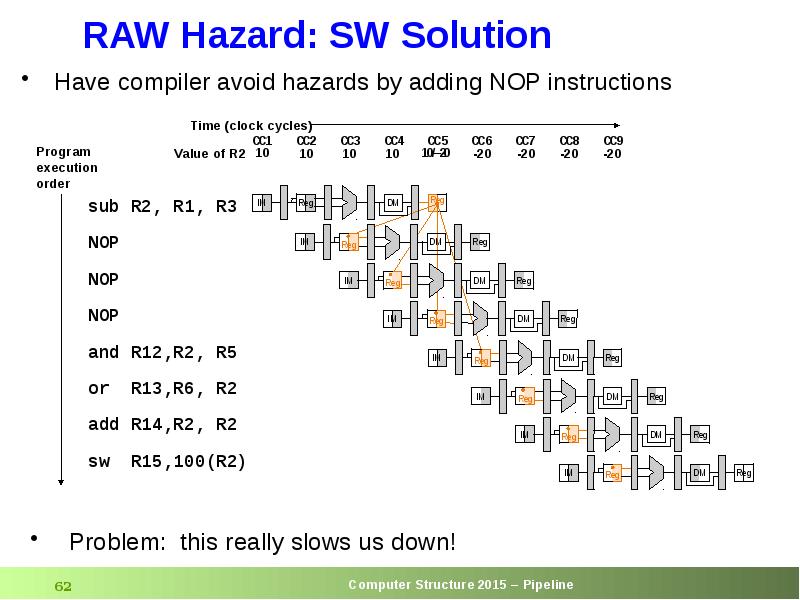

- 62. RAW Hazard: SW Solution

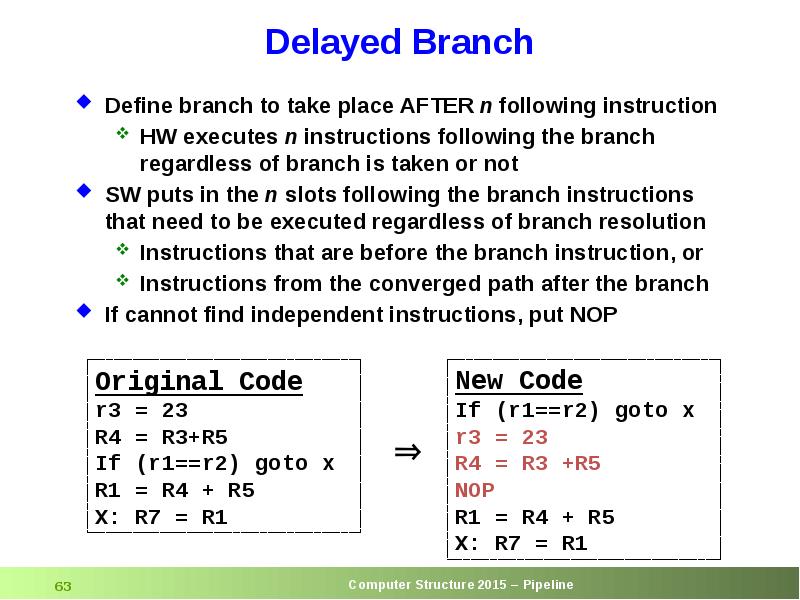

- 63. Delayed Branch: Define branch to take place AFTER n following instruction ...

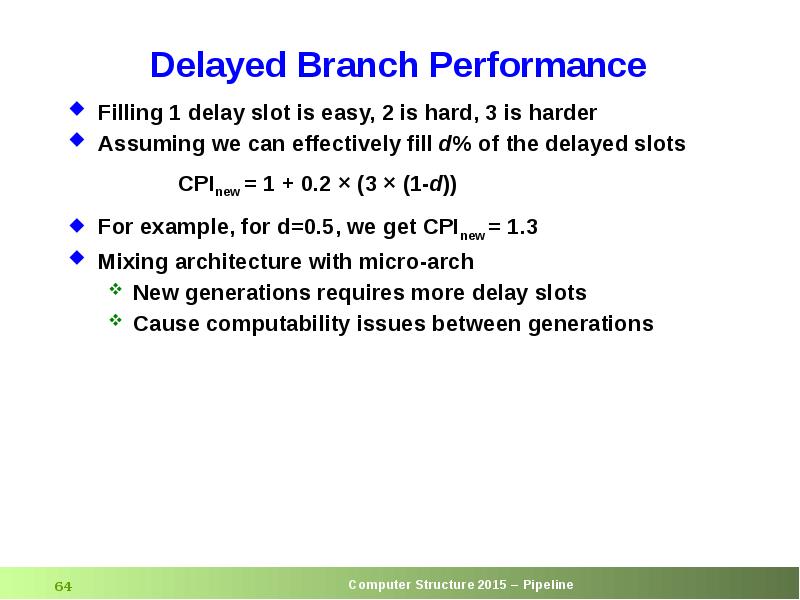

- 64. Delayed Branch Performance: Filling 1 delay slot is easy, 2 is ...
