Logistic regression presentation

Contents

- 2. Logistic Regression is a statistical method of classification of objects. In

- 3. A doctor classifies the tumor as malignant or benign. A

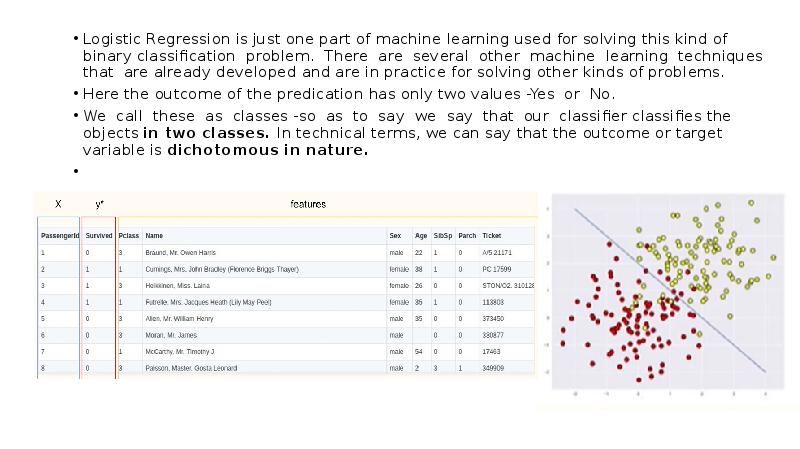

- 4. Logistic Regression is just one part of machine learning used for

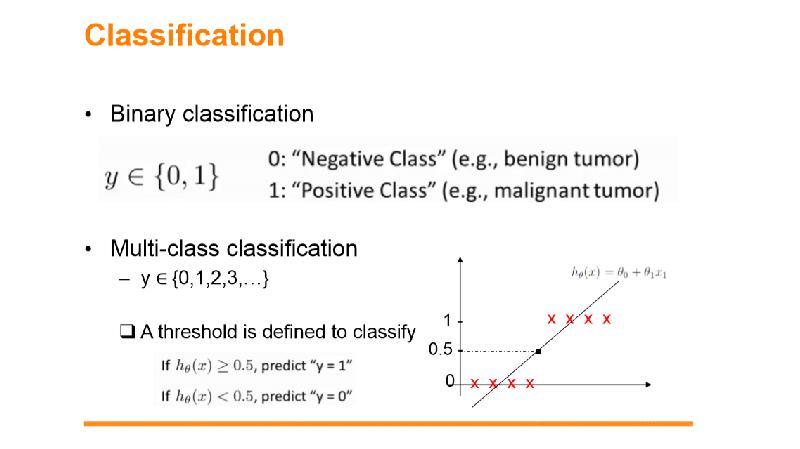

- 5. There are other classification problems in which the output may be

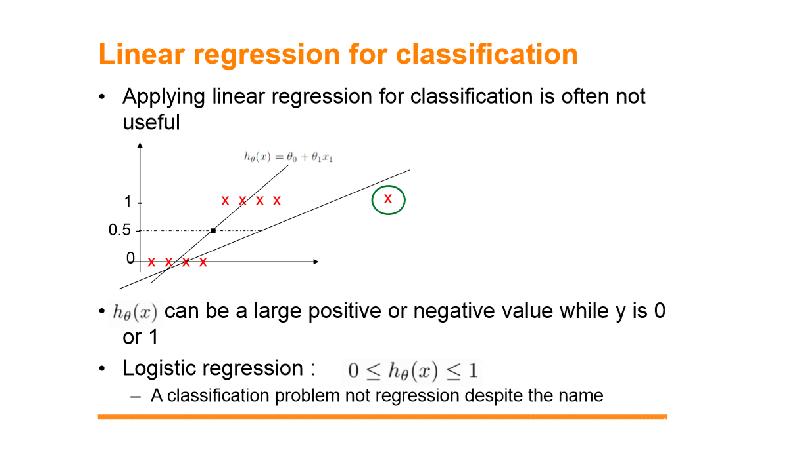

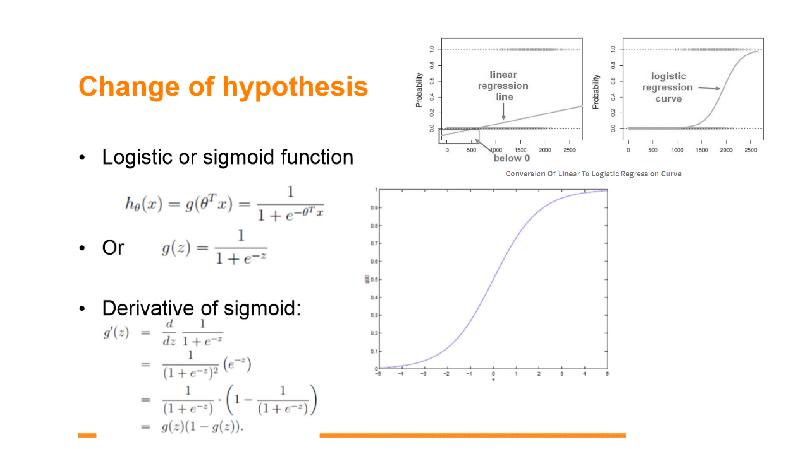

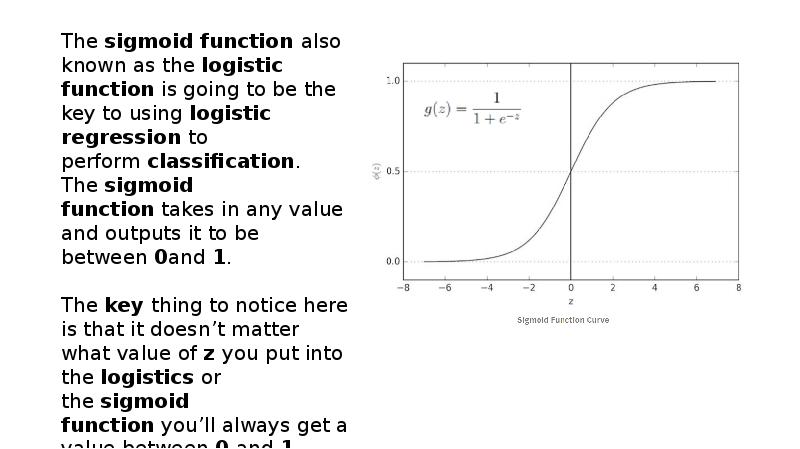

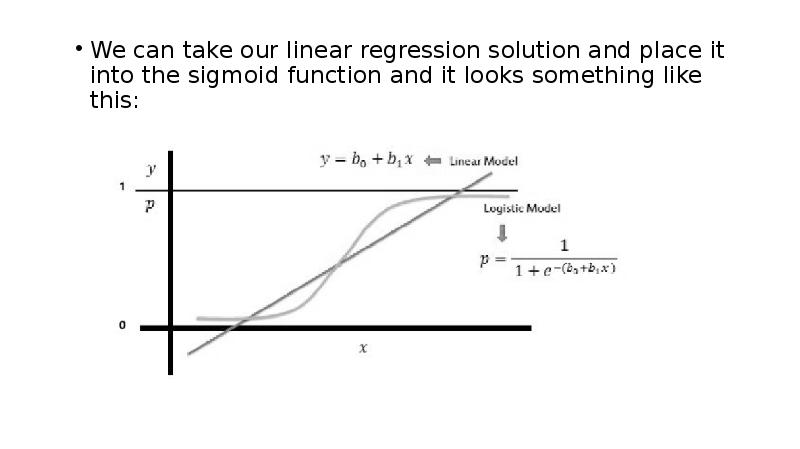

- 10. We can take our linear regression solution and place it into

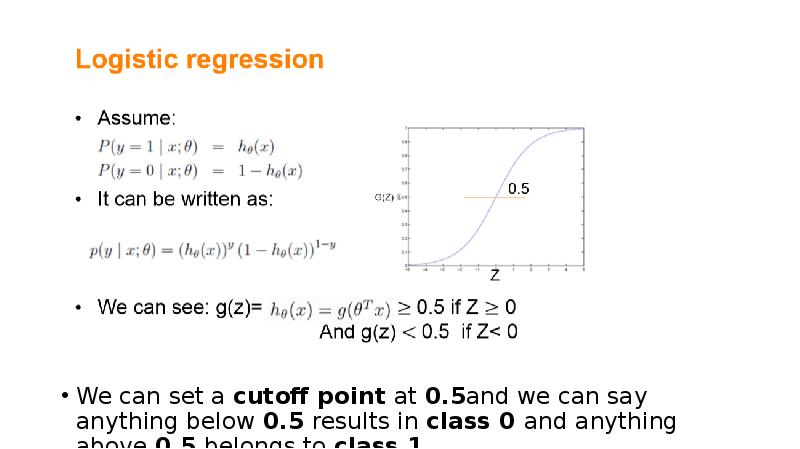
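The text of slide 10 is cut off, so the following is only a minimal sketch under the assumption that the linear regression output is placed into the sigmoid (logistic) function, which is the usual construction for logistic regression. The names `sigmoid` and `predict_proba` and the weights `w`, `b` are hypothetical and chosen for illustration only.

```python
import math

def sigmoid(z: float) -> float:
    """Map any real number to a value between 0 and 1."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(x: float, w: float, b: float) -> float:
    """Feed the linear-regression output w*x + b through the sigmoid."""
    return sigmoid(w * x + b)

# Hypothetical weights (assumption, not from the slides).
# The linear score here is 1.5 * 2.0 - 3.0 = 0.0.
p = predict_proba(x=2.0, w=1.5, b=-3.0)
```

A linear score of zero lands exactly in the middle of the sigmoid, large positive scores approach 1, and large negative scores approach 0.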

- 11. We can set a cutoff point at 0.5 and we can say anything below 0.5 results

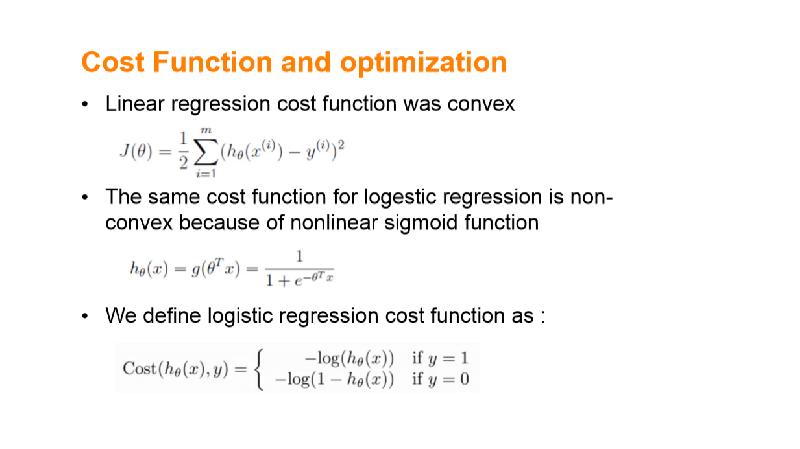
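The cutoff rule from slide 11 can be sketched as a small function. The slide text is truncated, so this sketch assumes that probabilities below the cutoff map to class 0 and everything else (including exactly 0.5) maps to class 1. The function name `classify` is hypothetical.

```python
def classify(probability: float, cutoff: float = 0.5) -> int:
    """Return class 1 when the probability reaches the cutoff, else class 0."""
    # Assumption: a probability exactly equal to the cutoff counts as class 1.
    return 1 if probability >= cutoff else 0

# Illustrative probabilities, not taken from the slides.
labels = [classify(p) for p in (0.12, 0.5, 0.87)]
```

Keeping the cutoff as a parameter makes it easy to move the boundary when false positives and false negatives have different costs.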

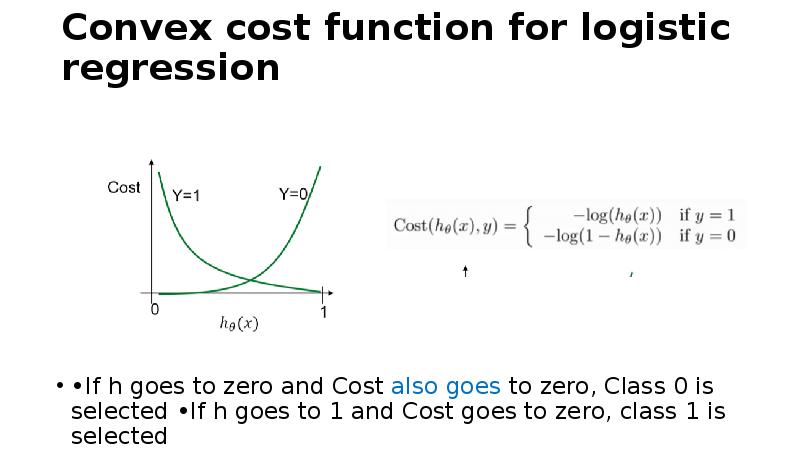

- 13. Convex cost function for logistic regression • If h goes to

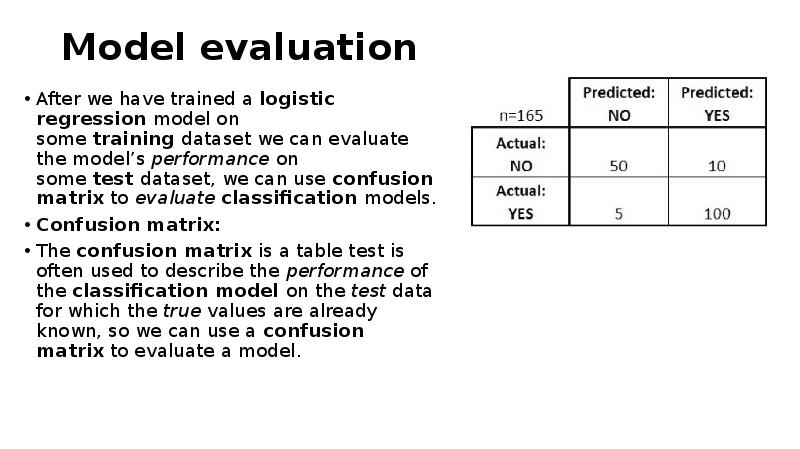
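Slide 13 is truncated, so the exact formula it shows is unknown. The sketch below assumes the standard log-loss (cross-entropy) cost, which is convex in the model parameters and is commonly used for logistic regression. Here `h` is the predicted probability and `y` is the true label; the function names are hypothetical.

```python
import math

def log_loss(h: float, y: int) -> float:
    """Cost of predicting probability h when the true label is y (0 or 1)."""
    # Assumed standard form: -log(h) if y == 1, -log(1 - h) if y == 0.
    return -math.log(h) if y == 1 else -math.log(1.0 - h)

def mean_cost(predictions: list[float], labels: list[int]) -> float:
    """Average the per-example cost over a dataset."""
    return sum(log_loss(h, y) for h, y in zip(predictions, labels)) / len(labels)
```

Under this form, the cost approaches zero as `h` approaches the true label and grows without bound as `h` approaches the wrong label, so confident mistakes are penalized most heavily.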

- 14. Model evaluation After we have trained a logistic regression model on some training dataset

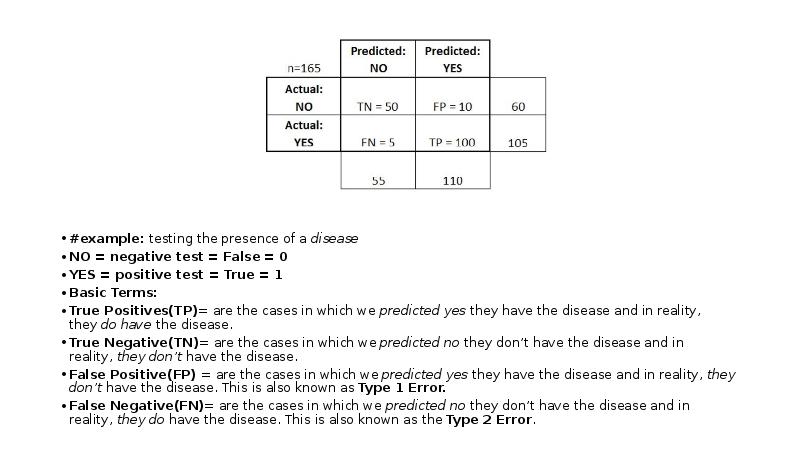

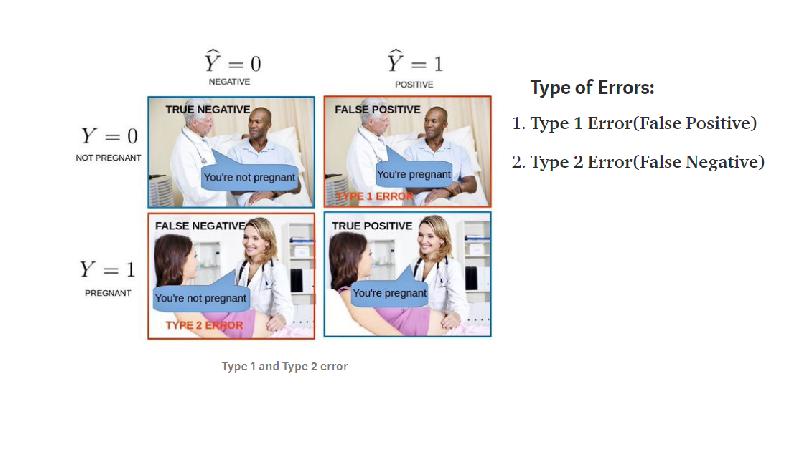

- 15. #example: testing the presence of a disease NO = negative test = False

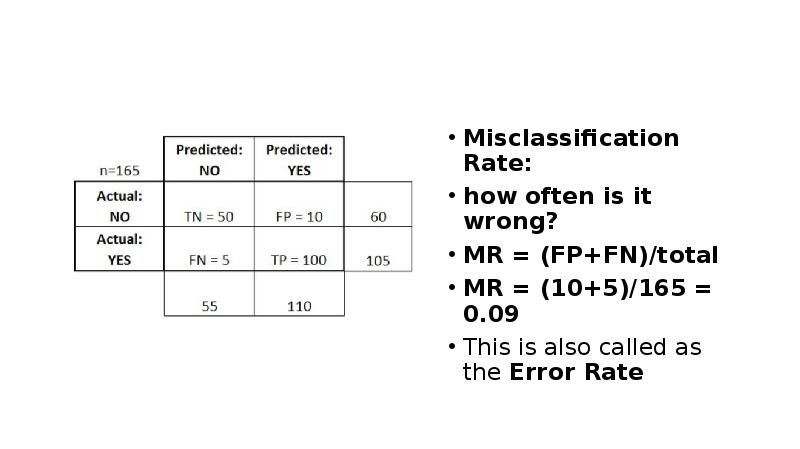

- 16. Misclassification Rate: how often is it wrong? MR = (FP+FN)/total MR

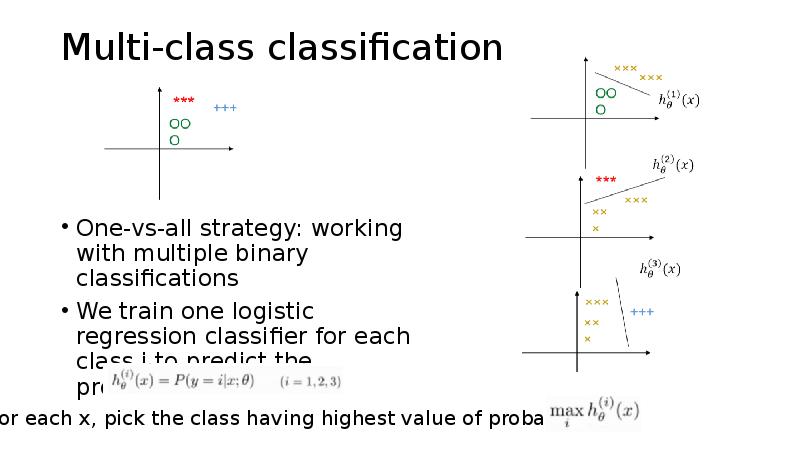
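The formula MR = (FP+FN)/total from slide 16 can be computed directly from the four confusion-matrix counts. The counts in this sketch are hypothetical and chosen only to illustrate the arithmetic; they are not taken from the presentation.

```python
def misclassification_rate(tp: int, tn: int, fp: int, fn: int) -> float:
    """MR = (FP + FN) / total, where total is the sum of all four counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (fp + fn) / total

# Hypothetical counts: 15 wrong predictions out of 165 in total.
mr = misclassification_rate(tp=100, tn=50, fp=10, fn=5)
```

With these counts the rate is 15/165, or roughly 9% of predictions being wrong.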

- 18. Multi-class classification One-vs-all strategy: working with multiple binary classifications

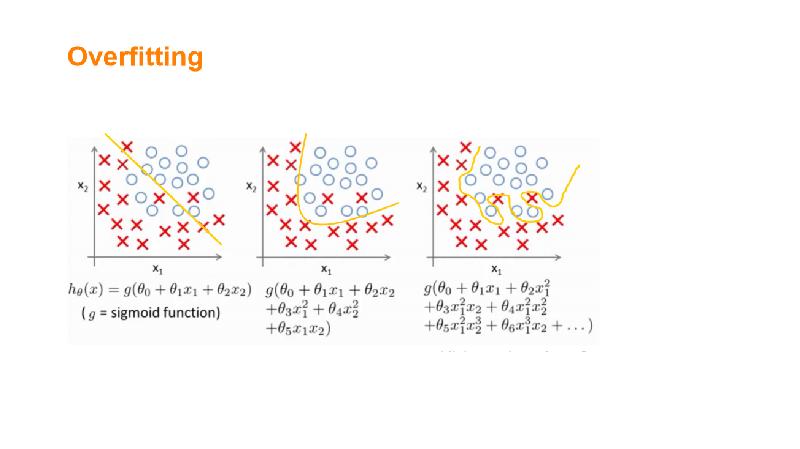

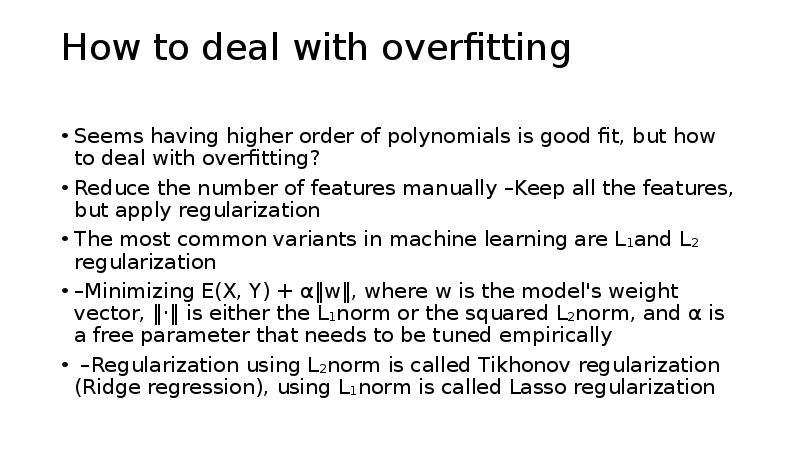
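The one-vs-all strategy from slide 18 can be sketched at prediction time as follows: one binary classifier per class scores the input, and the class with the highest probability wins. The weights below are hypothetical stand-ins for already trained binary classifiers, and the sigmoid is assumed as the scoring function.

```python
import math

def sigmoid(z: float) -> float:
    # Plain form; adequate for the small scores used in this sketch.
    return 1.0 / (1.0 + math.exp(-z))

def predict_one_vs_all(x, classifiers):
    """Score x with every binary classifier and return the best class label."""
    scores = {
        label: sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        for label, (w, b) in classifiers.items()
    }
    return max(scores, key=scores.get)

# Hypothetical (weights, bias) pairs, one "this class vs the rest" model each.
classifiers = {
    "red": ([2.0, -1.0], 0.0),
    "green": ([-1.0, 2.0], 0.0),
    "blue": ([-1.0, -1.0], 1.0),
}

label = predict_one_vs_all([3.0, 0.5], classifiers)
```

Each binary model only answers "this class or not", so a problem with K classes needs K such models.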

- 20. How to deal with overfitting Seems having higher order of

- 21. Advantages: it doesn’t require high computational power is easily interpretable is used

- 22. Disadvantages: while working with Logistic regression you are not able to handle

- 23. https://www.youtube.com/watch?v=yIYKR4sgzI8

- 24. AT HOME https://www.youtube.com/watch?v=zAULhNrnuL4 https://www.youtube.com/watch?v=ckkiG-SDuV8 https://www.youtube.com/watch?v=NmjT1_nClzg https://www.youtube.com/watch?v=gcr3qy0SdGQ https://www.youtube.com/watch?v=scVUuaLmb9o


Slides and text of this presentation
