Task Parallel Library. Data Parallelism Patterns (presentation)

Contents

- 2. Introduction to Parallel Programming Introduction to Parallel Programming Parallel Loops Parallel

- 3. Introduction to Parallel Programming Introduction to Parallel Programming Parallel Loops Parallel

- 4. Hardware trends predict more cores instead of faster clock speeds Multicore

- 5. Potential parallelism: Some parallel applications can be written for specific hardware

- 6. Parallel programming patterns aspects: Decomposition

- 7. Tasks are sequential operations that work together to perform a larger

- 8. Tasks that are independent of one another can run in parallel

- 9. Scalable sharing of data: Tasks often need to share data

- 10. Understand your problem or application and look for potential parallelism across

- 11. Concurrency is a concept related to multitasking and asynchronous input-output (I/O)

- 12. With parallelism, concurrent threads execute at the same time on multiple

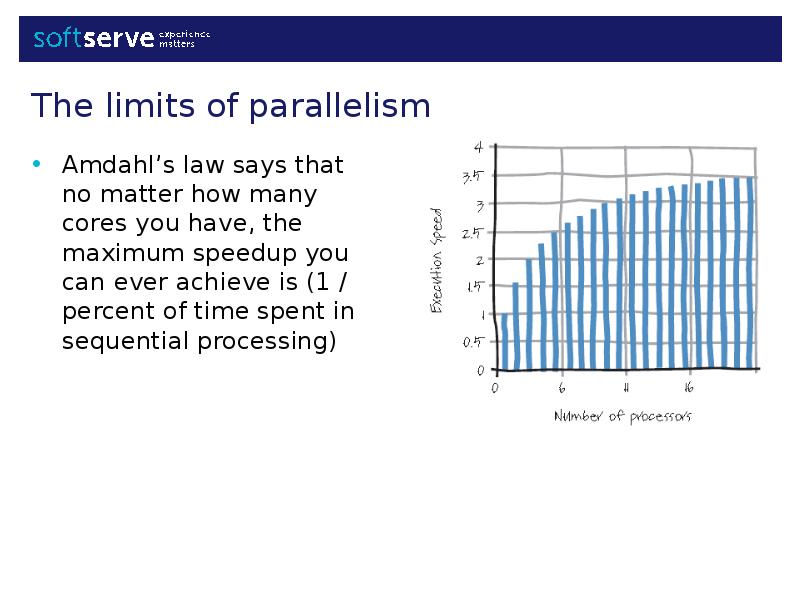

- 13. Amdahl’s law says that no matter how many cores you have,

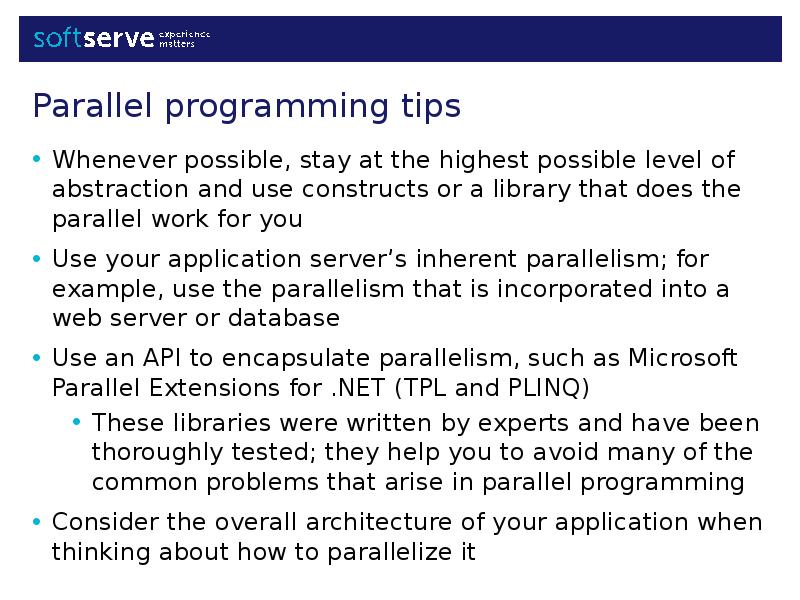

- 14. Whenever possible, stay at the highest possible level of abstraction and

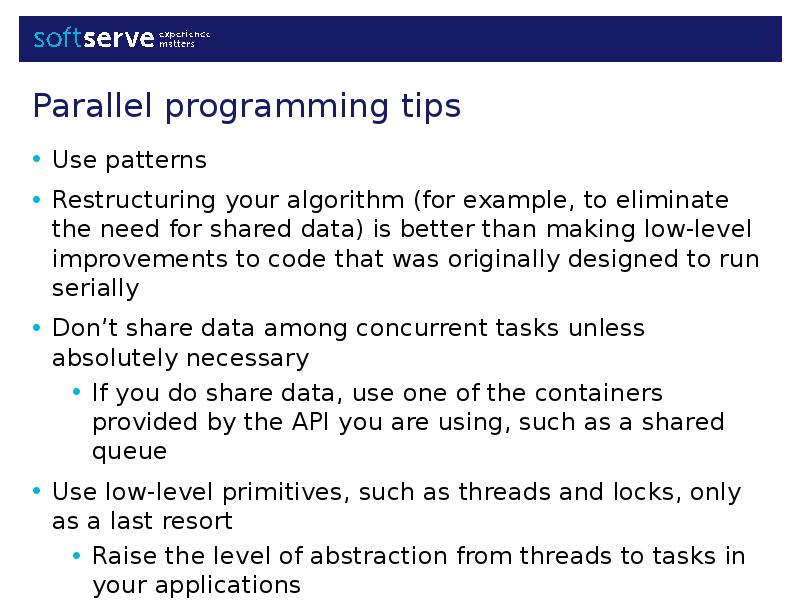

- 15. Parallel programming tips: Use patterns

- 16. Code examples of this presentation: Based on the .NET Framework 4

- 17. Introduction to Parallel Programming Introduction to Parallel Programming Parallel Loops Parallel

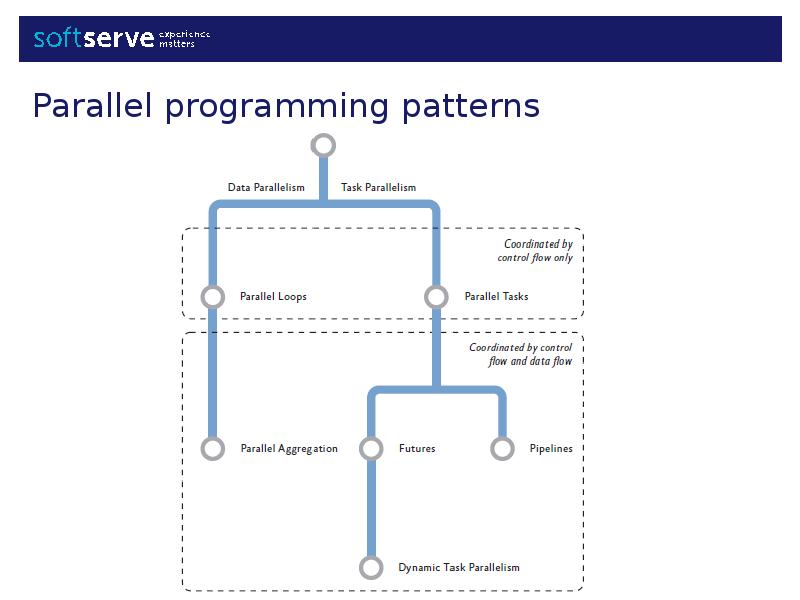

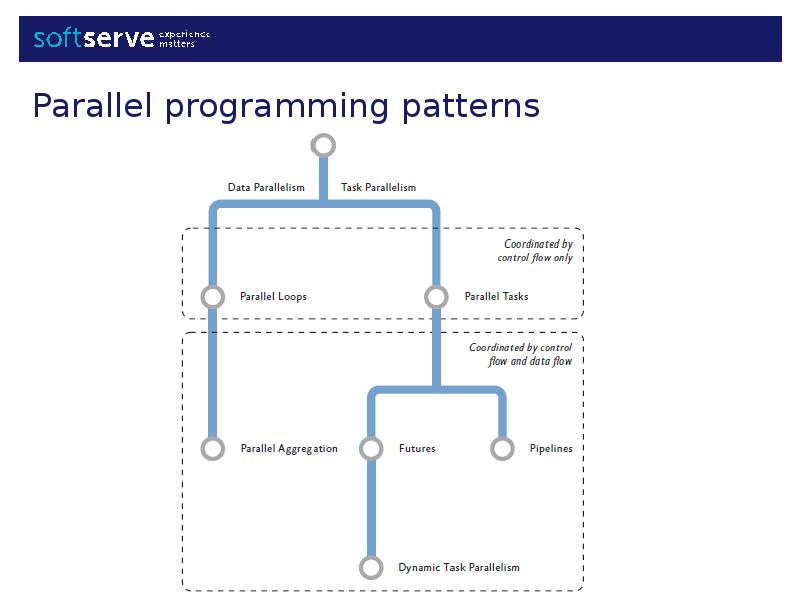

- 18. Parallel programming patterns

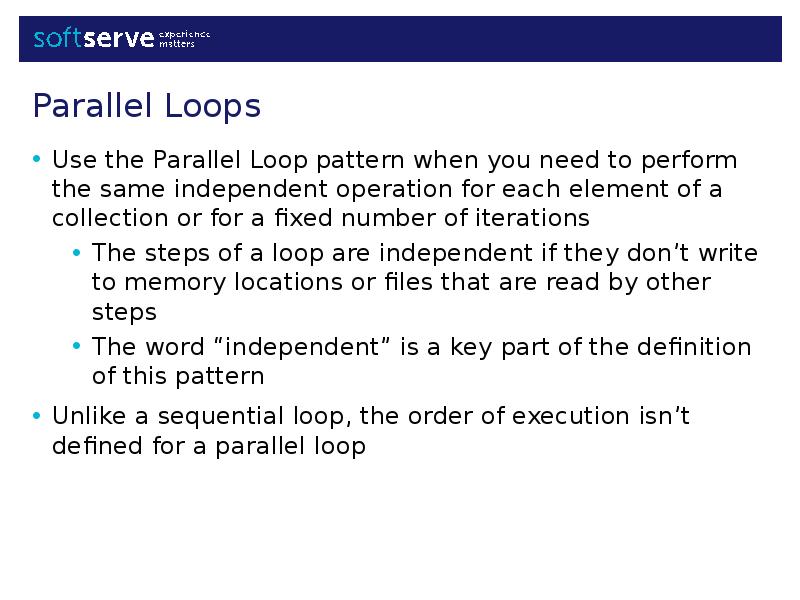

- 19. Use the Parallel Loop pattern when you need to perform the

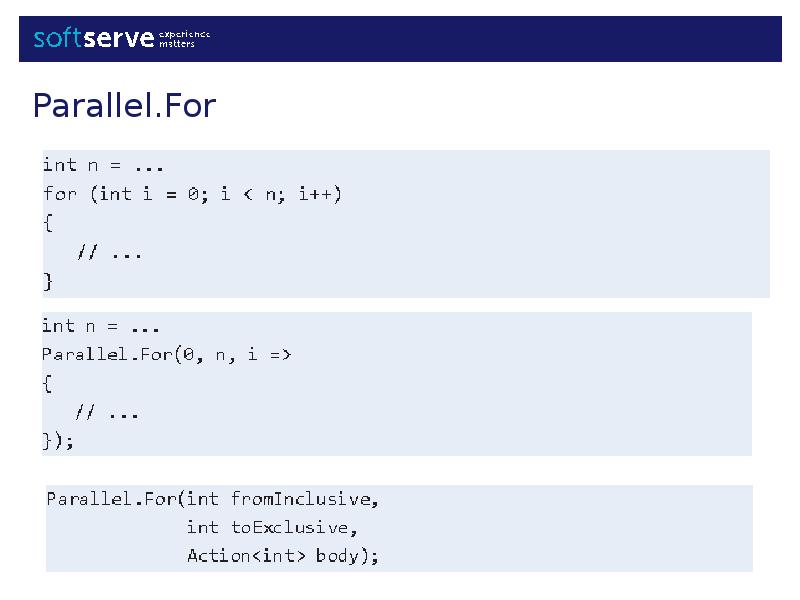

- 20. Parallel.For

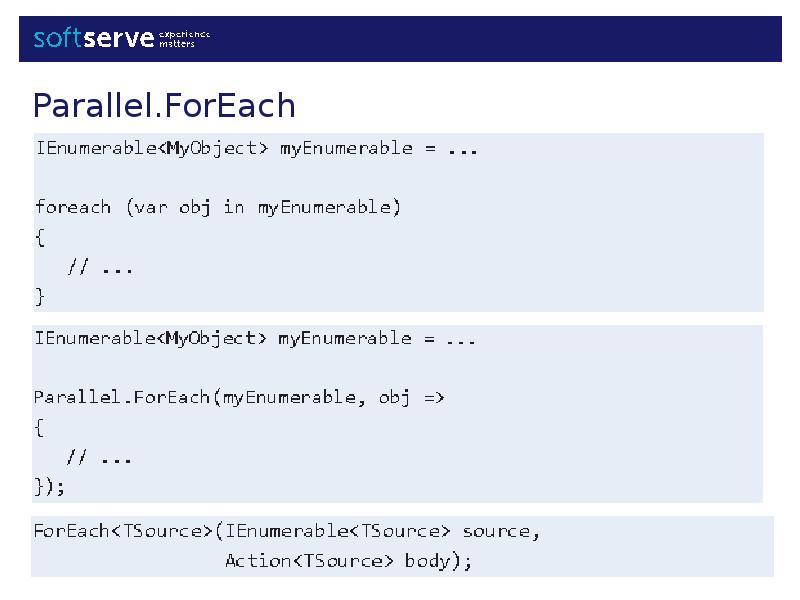

- 21. Parallel.ForEach

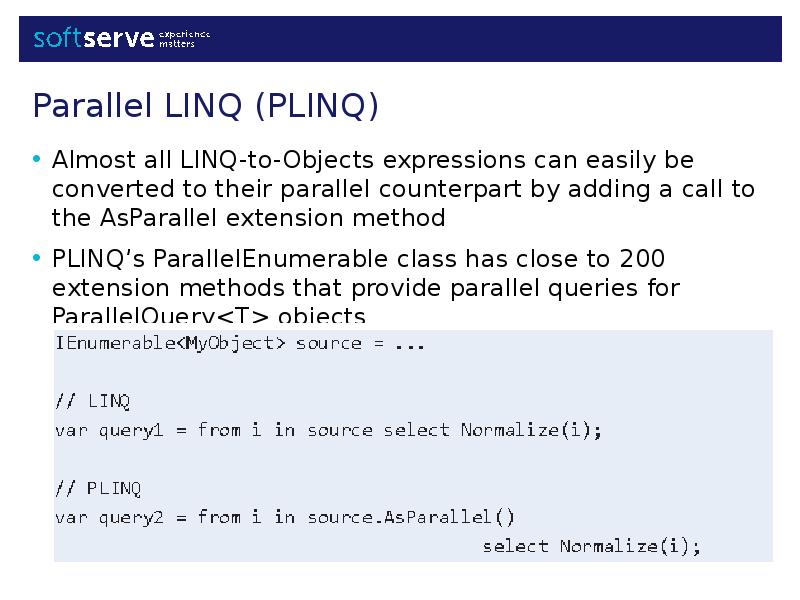

- 22. Almost all LINQ-to-Objects expressions can easily be converted to their parallel

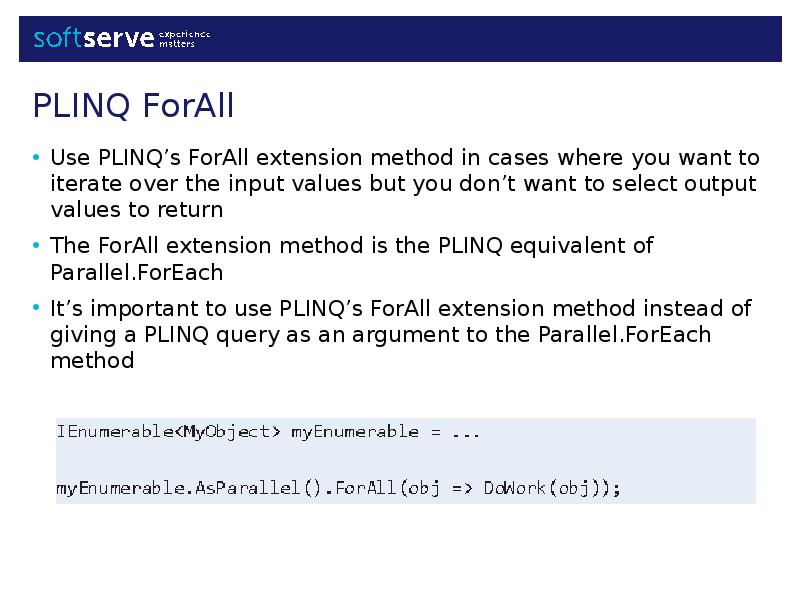

- 23. Use PLINQ’s ForAll extension method in cases where you want to

- 24. The .NET implementation of the Parallel Loop pattern ensures that exceptions

- 25. Parallel loops variations: Parallel loops

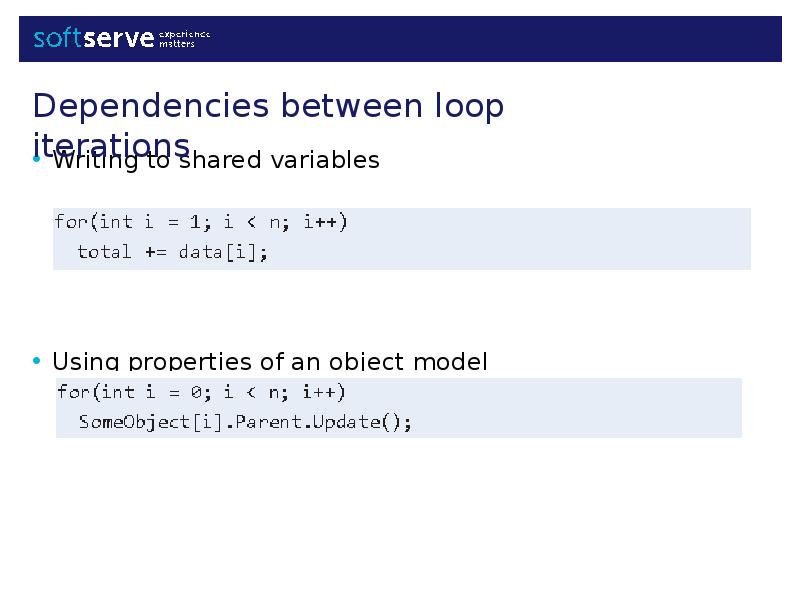

- 26. Dependencies between loop iterations: Writing to shared variables

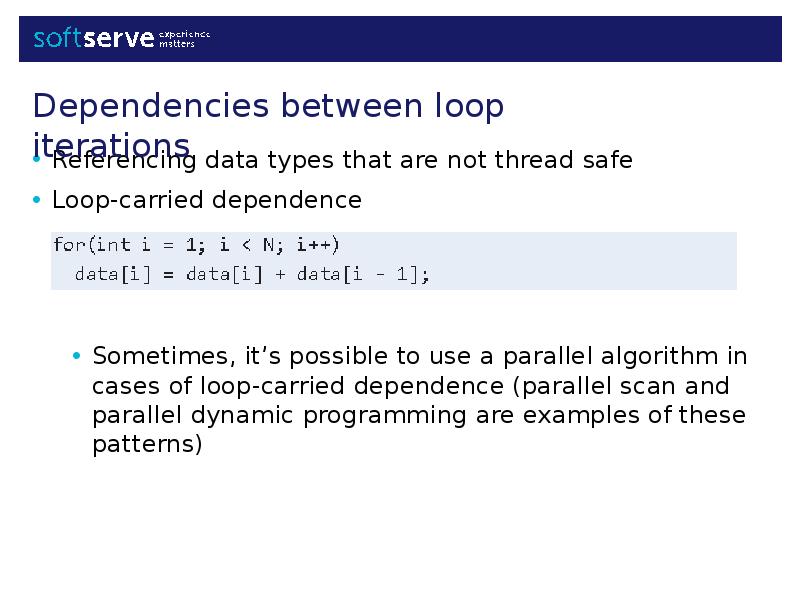

- 27. Referencing data types that are not thread safe Dependencies between loop

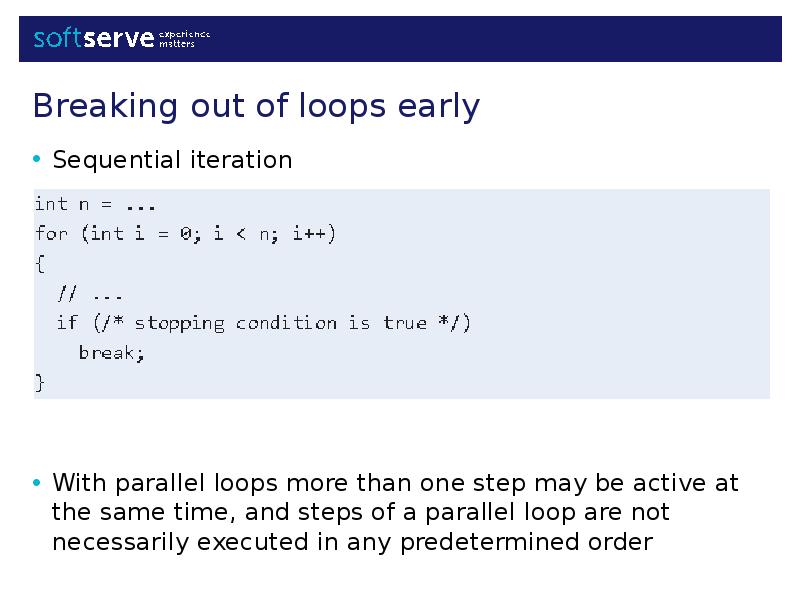

- 28. Breaking out of loops early: Sequential iteration

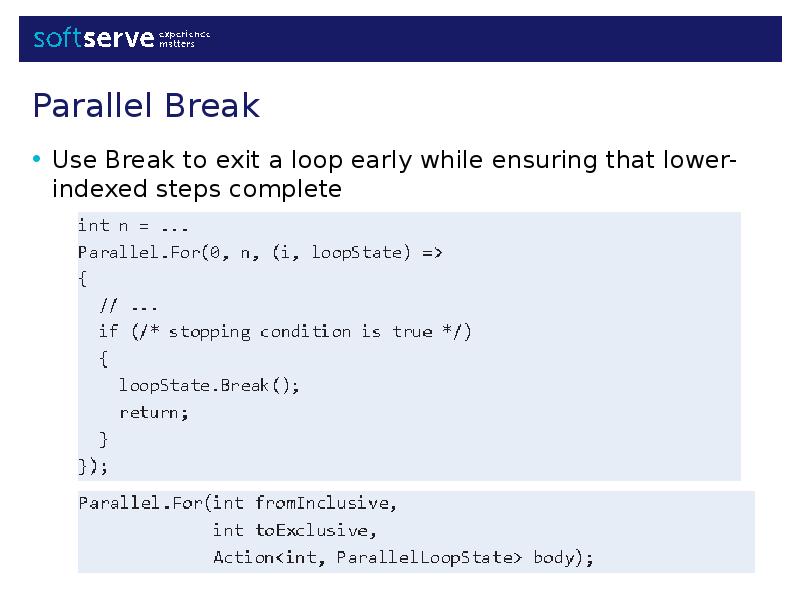

- 29. Use Break to exit a loop early while ensuring that lower-indexed

- 30. Calling Break doesn’t stop other steps that might have already started

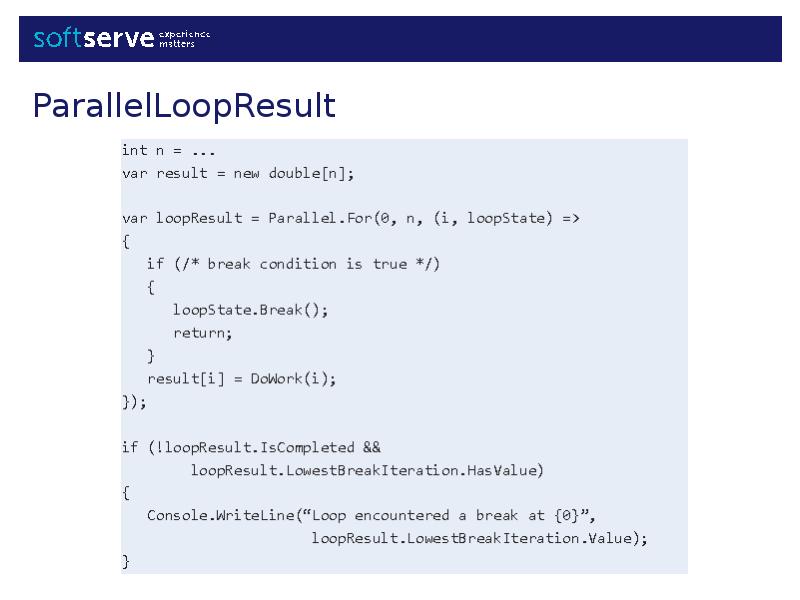

- 31. ParallelLoopResult

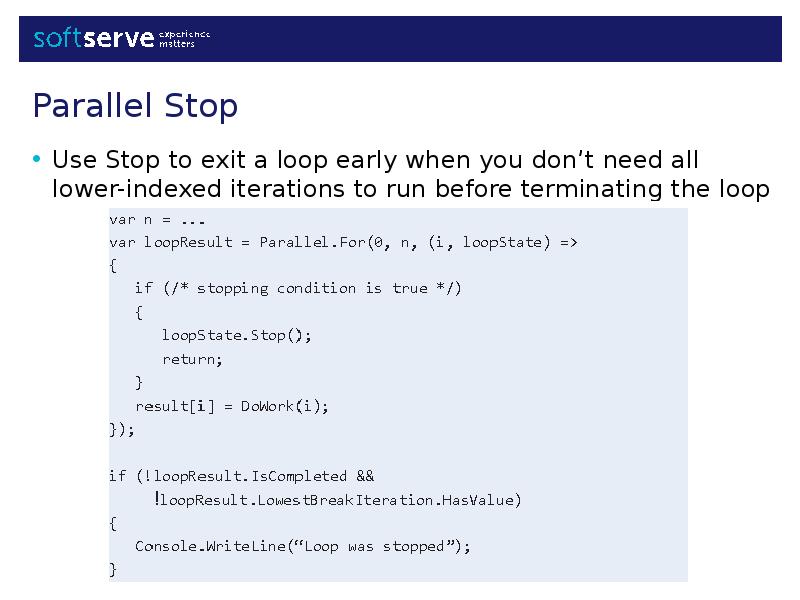

- 32. Use Stop to exit a loop early when you don’t need

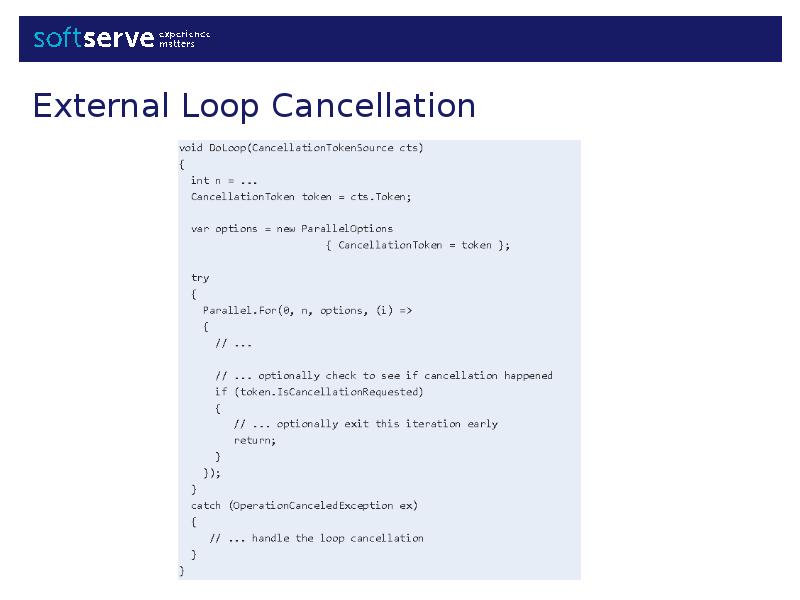

- 33. External Loop Cancellation

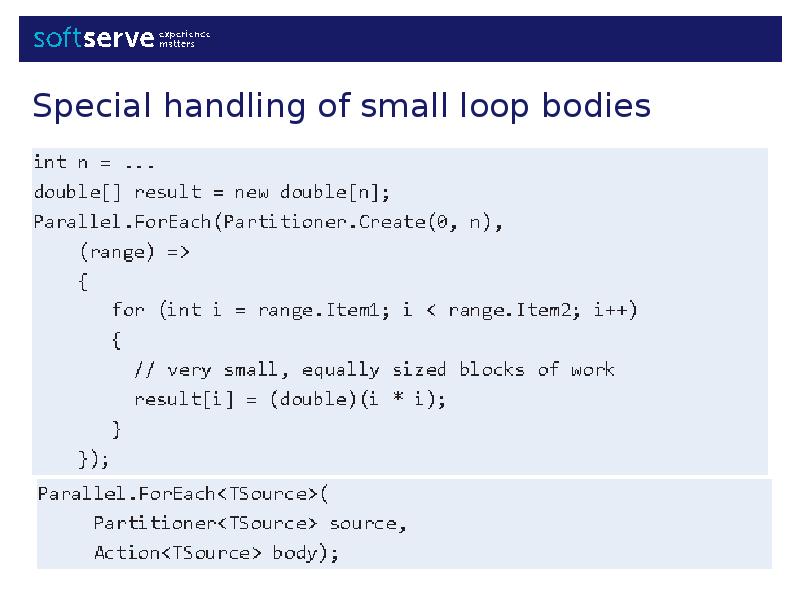

- 34. Special handling of small loop bodies

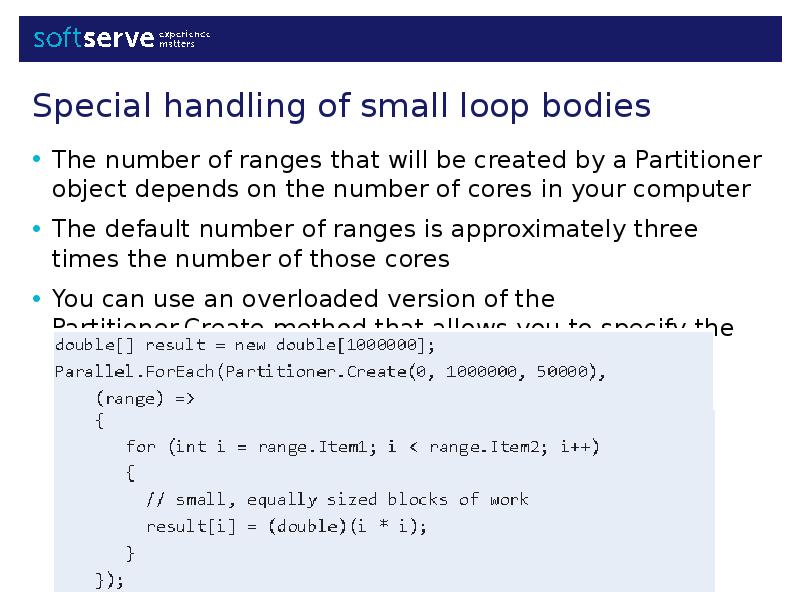

- 35. The number of ranges that will be created by a Partitioner

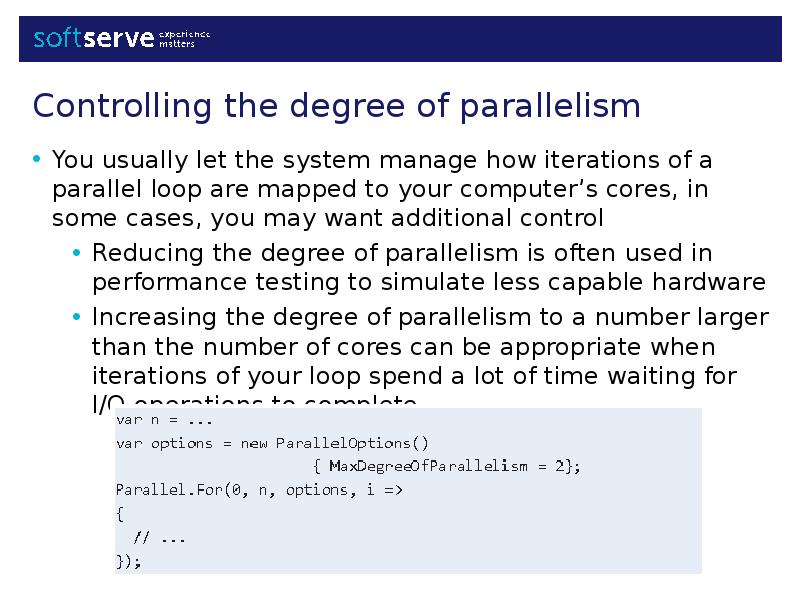

- 36. You usually let the system manage how iterations of a parallel

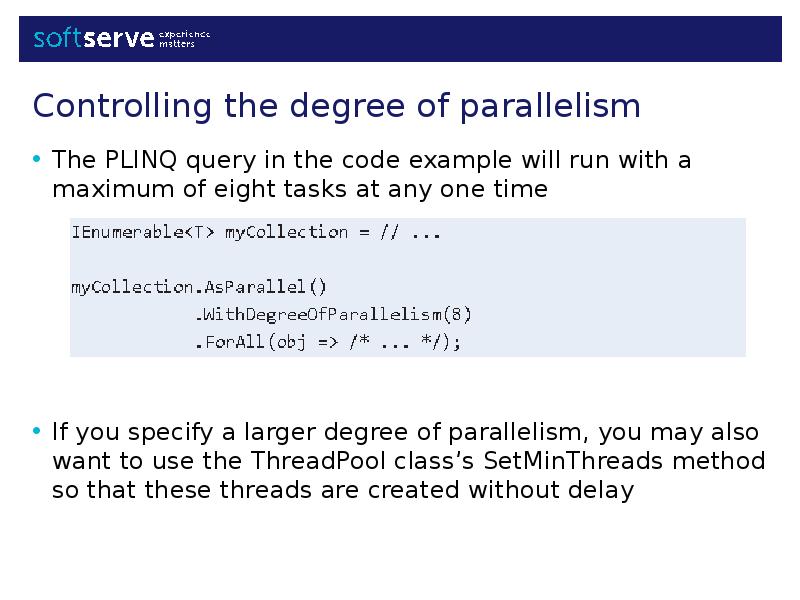

- 37. The PLINQ query in the code example will run with a

- 38. Sometimes you need to maintain thread-local state during the execution of

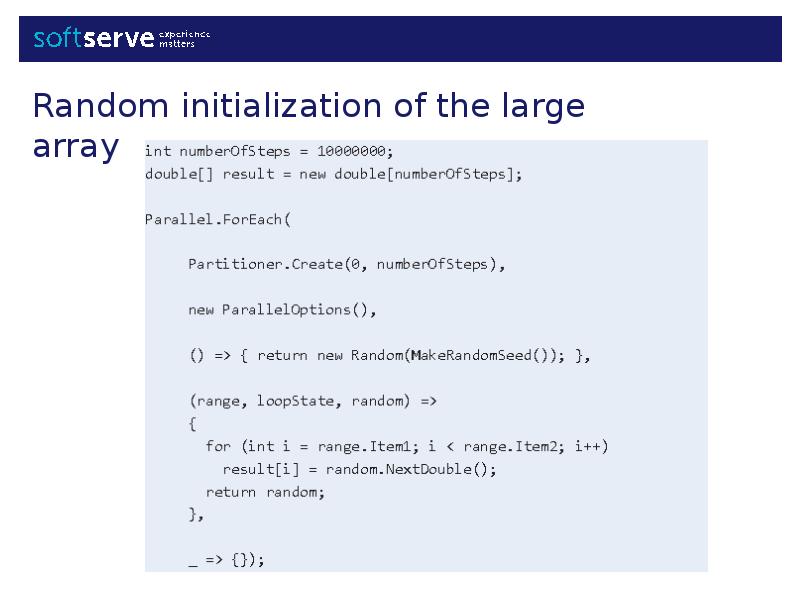

- 39. Random initialization of the large array

- 40. Calling the default Random constructor twice in short succession may use

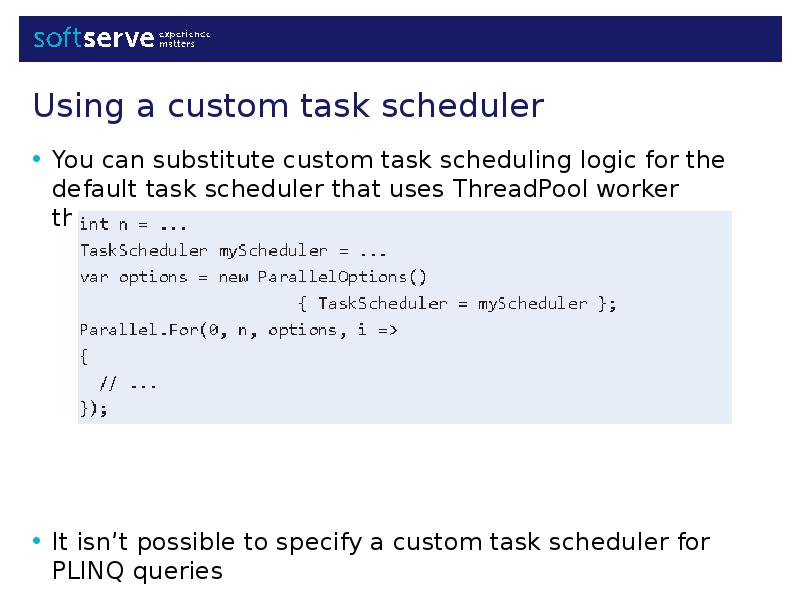

- 41. You can substitute custom task scheduling logic for the default task

- 42. Anti-Patterns: Step size other than one

- 43. Parallel loops design notes: Adaptive partitioning

- 44. Introduction to Parallel Programming Introduction to Parallel Programming Parallel Loops Parallel

- 45. Parallel programming patterns

- 46. The pattern is more general than calculating a sum The

- 47. Calculating a sum: Sequential version

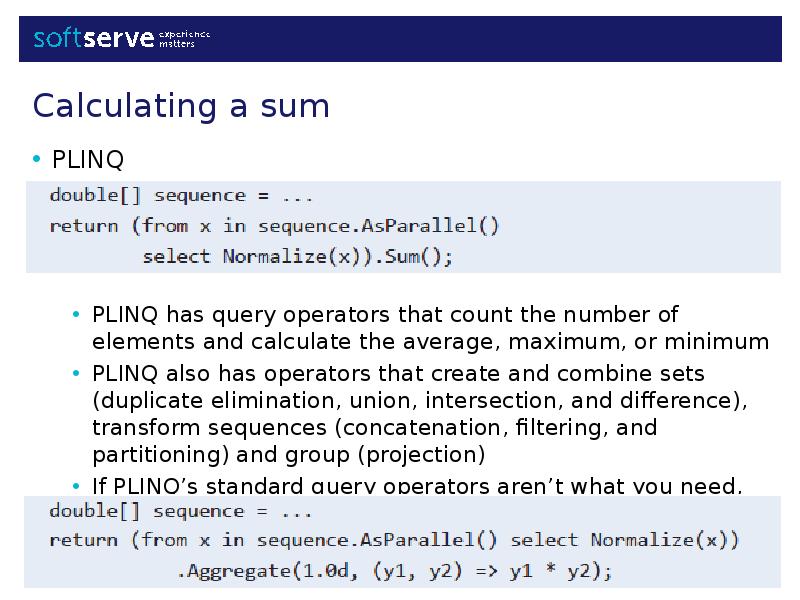

- 48. Calculating a sum: PLINQ

- 49. Parallel aggregation pattern in .NET: PLINQ is usually the recommended approach

- 50. The PLINQ Aggregate extension method includes an overloaded version that allows

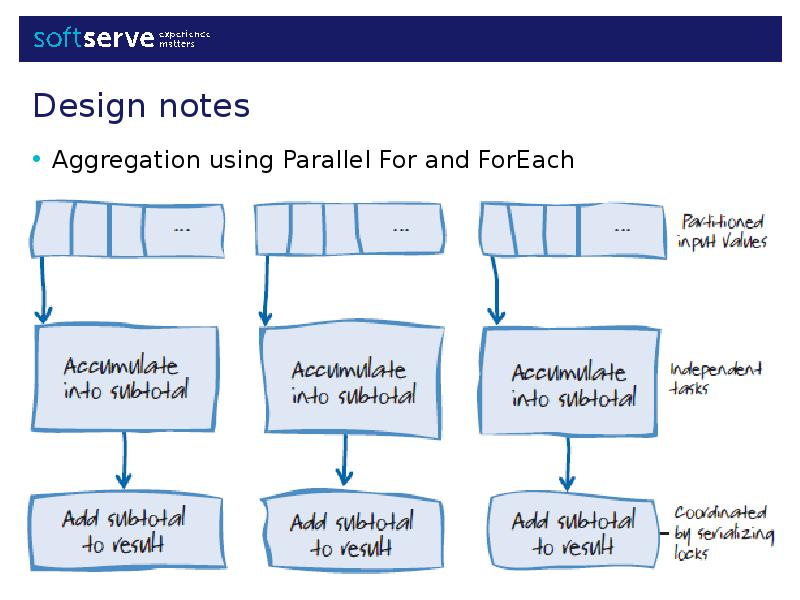

- 51. Design notes: Aggregation using Parallel For and ForEach

- 52. Aggregation in PLINQ does not require the developer to use locks

- 53. Task Parallel Library. Data Parallelism Patterns
